The ultimate golden release upgrade guide

How to get from dCache 2.6 to dCache 2.10

Author: Gerd Behrmann <behrmann@ndgf.org>

About this document

The dCache golden release upgrade guides aim to make migrating between golden releases easier by compiling all important information into a single document. The document is based on the release notes of versions 2.7, 2.8, 2.9 and 2.10. In contrast to the release notes, the guide ignores minor changes and is structured more along the lines of functionality groups rather than individual services.

Although the guide aims to collect all information required to upgrade from dCache 2.6 to 2.10, there is information in the release notes not found in this guide. The reader is advised to at least have a brief look at the release notes found at dCache.org.

There are plenty of changes to configuration properties, default values, log output, admin shell commands, admin shell output, etc. If you have scripts that generate dCache configuration, issue shell commands, or parse the output of shell commands or log files, then you should expect that these scripts need to be adjusted. This document does not list all those changes.

About this release

dCache 2.10 is the fifth golden (long term support) release of dCache. The release will be supported at least until summer 2016. This is the recommended release for the second LHC run.

As always, the amount of changes between this and the previous golden release is staggering. We have tried to make the upgrade process as smooth as possible, while at the same time moving the product forward. This means that we try to make dCache backwards compatible with the previous golden release, but not at the expense of changes that we believe improve dCache in the long run.

The most visible change when upgrading is that most configuration properties have been renamed, and some have changed default values. Many services have been renamed, split, or merged. These changes require work when upgrading, even though most of them are cosmetic - they improve the consistency of dCache and thus ease the configuration, but those changes do not fundamentally affect how dCache works.

dCache pools from version 2.6 to 2.9 can be mixed with services from dCache 2.10. This enables a staged roll-out in which non-pool nodes are upgraded to 2.10 first, followed by pools later. It is however recommended to upgrade to the latest version of 2.6 before performing a staged roll-out.

Upgrading directly from versions prior to 2.6 is not officially supported. If you run versions before 2.6, we recommend upgrading to 2.6 first. That is not to say that upgrading directly to 2.10 is not possible, but we have not explicitly tested nor documented such an upgrade path.

Downgrading after an upgrade is not possible without manual intervention. In particular, database schemas have to be rolled back before downgrading. If pools use the Berkeley DB metadata back-end, these need to be converted to the file back-end before downgrading and then back to the Berkeley DB back-end after the downgrade.

Incompatibilities

Upgrading from 2.6 to 2.10 will require adjustments to the dCache configuration. Most changes are described in detail in the following sections, but for the impatient, the incompatible changes include:

- Most configuration properties have been renamed. Although most old property names can still be used, some are no longer supported. Upon upgrade these have to be changed in the dCache configuration.

- Several service definitions have changed. In particular, flavors of DCAP, FTP and NFS doors have been collapsed, and several redundant services have been removed. The layout files instantiating such services have to be updated.

- The classic pool manager partition type has been removed. The wass partition type or another pool selection algorithm must be used instead. If you relied on the classic partition type, you have to manually adjust poolmanager.conf during upgrade.

- The wrandom partition type has been removed. The same behavior may be achieved with the wass partition type.

- Several default values for configuration properties have changed. Although we believe the new defaults are better, you should review the changes while upgrading.

- The space manager database schema has changed. It will be updated on upgrade, which may take some time on large databases. Previous versions of dCache are not forward compatible with the new schema and the schema has to be explicitly rolled back before downgrading dCache.

- The Chimera database schema has changed. It will be updated on upgrade, which may take some time on large databases. Downgrade is not possible without rolling back the schema changes.

- The chimera-cli utility has been replaced by chimera.

- In DCAP, ctime is now the last attribute change time (as in POSIX) rather than the creation time.

- The space manager admin command line interface has been rewritten, with several commands being removed, replaced, or modified.

- The semantics of implicit space reservations and space reservations for non-SRM transfers has changed.

- If the NFS door and PnfsManager are hosted in the same domain, then the layout file must list the pnfsmanager service before the nfs service.

- The new property dcache.upload-directory may have to be defined on upgrade. Read the section on the srm service for further details.

- An internal change to how we implement dCache services in the Spring framework breaks compatibility with third party plugins providing custom services implemented with Spring. Plugin providers should update their plugin to match the changes in b7234ee.

- SRM uploads that are not finalized by submitting an srmPutDone will be deleted. The srmPutDone message is required by the SRM standard and standard compliant clients will not have a problem with this. The file is not available for reading until srmPutDone has been issued.

- IPv6 support is enabled by default.

- Tape integration was rewritten. Classic HSM scripts continue to be supported, however the pool setup file should be regenerated after upgrade.

- The HSM cleaner is enabled by default.

- Kerberos uses values in /etc/krb5.conf by default. If both kerberos.realm and kerberos.key-distribution-center-list are set, then custom values are used.

- In billing log files, transfer-specific information is written as a colon-separated list of items. For HTTP and xrootd, the format has changed, with a colon now separating the protocol version information and the client IP address. The output for HTTP and xrootd is now consistent with information recorded for other protocol transfers.

WARNING By default, dCache used to disallow overwriting files using FTP or SRM. To be standards compliant, this has been changed: the default value of both srm.enable.overwrite and ftp.enable.overwrite is now true. For SRM, files are not overwritten unless explicitly requested by the client.

Services

This section describes renamed, collapsed, split, and removed services. A summary of the changes is contained in the following table.

| Service | Alternative | Configuration / Remark |
|---|---|---|
| gridftp | ftp | ftp.authn.protocol = gsi |
| kerberosftp | ftp | ftp.authn.protocol = kerberos |
| dcap | dcap | dcap.authn.protocol = plain, dcap.authz.anonymous-operations = READONLY |
| gsidcap | dcap | dcap.authn.protocol = gsi |
| kerberosdcap | dcap | dcap.authn.protocol = kerberos |
| authdcap | dcap | dcap.authn.protocol = auth |
| nfsv3 | nfs | nfs.version = 3 |
| nfsv41 | nfs | nfs.version = 4.1 |
| acl | pnfsmanager | pnfsmanager.enable.acl = true |
| dummy-prestager | pinmanager | |
| webadmin | httpd | |
| hopping | hoppingmanager | |
| srm-loginbroker | loginbroker | srm-loginbroker can be removed from the layout |
| gsi-pam | gplazma | most likely you can drop this service from the layout |

Merged and split services

In previous releases some protocols were implemented as several doors: e.g., DCAP existed as plain, Kerberos, and GSI doors, as did FTP, and NFS existed as both an NFS 3 and an NFS 4 door (and the NFS 4 version, confusingly, was able to serve NFS 3 too). Most properties used by these doors were shared by all variants. Other protocols, such as HTTP and xrootd, only had a single door with the authentication scheme being selectable through a configuration property.

To improve consistency, variants of a door have now been collapsed into a single service. A configuration option allows the authentication type or NFS version to be configured.

Specifically, the services ftp, gridftp, and kerberosftp have been collapsed into the service ftp with the configuration option ftp.authn.protocol setting the authentication protocol. The services dcap, gsidcap, kerberosdcap, and authdcap have been collapsed into the service dcap with the configuration option dcap.authn.protocol setting the authentication protocol. The services nfsv3 and nfsv41 have been collapsed into the service nfs with the configuration option nfs.version defining the NFS version.
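For sites with many doors, the mapping in the services table can be applied mechanically. The following Python sketch is a hypothetical helper, not a tool shipped with dCache: it turns the header of a 2.6 layout stanza such as [doorDomain/gsidcap] into the corresponding 2.10 stanza plus the properties that select the old behavior, and it refuses services that were removed outright so that those are reviewed by hand.

```python
# Hypothetical migration helper (not part of dCache). Follows the services
# table: old service -> (new service, properties restoring the old behavior).
RENAMED_SERVICES = {
    "gridftp": ("ftp", {"ftp.authn.protocol": "gsi"}),
    "kerberosftp": ("ftp", {"ftp.authn.protocol": "kerberos"}),
    "dcap": ("dcap", {"dcap.authn.protocol": "plain",
                      "dcap.authz.anonymous-operations": "READONLY"}),
    "gsidcap": ("dcap", {"dcap.authn.protocol": "gsi"}),
    "kerberosdcap": ("dcap", {"dcap.authn.protocol": "kerberos"}),
    "authdcap": ("dcap", {"dcap.authn.protocol": "auth"}),
    "nfsv3": ("nfs", {"nfs.version": "3"}),
    "nfsv41": ("nfs", {"nfs.version": "4.1"}),
    "hopping": ("hoppingmanager", {}),
}

# Services that no longer exist; their stanzas need a manual decision.
REMOVED_SERVICES = {"acl", "dummy-prestager", "webadmin",
                    "srm-loginbroker", "gsi-pam"}


def migrate_service_stanza(domain, service):
    """Return the layout lines replacing a [domain/service] stanza header."""
    if service in REMOVED_SERVICES:
        raise ValueError(f"{service} was removed; edit this stanza by hand")
    # Services not mentioned in the table are passed through unchanged.
    new_service, properties = RENAMED_SERVICES.get(service, (service, {}))
    lines = [f"[{domain}/{new_service}]"]
    lines.extend(f"{key} = {value}" for key, value in properties.items())
    return lines


print("\n".join(migrate_service_stanza("doorDomain", "gsidcap")))
```

Any properties already present in the old stanza still have to be carried over, and renamed per the configuration changes described below.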

Removed services

The services acl, dummy-prestager, and webadmin have been removed entirely. For several releases these services did nothing more than print a warning that the functionality has been embedded in other services.

The service gsi-pam has been removed. It was a legacy service that did not serve any purpose.

The srm-loginbroker service has been removed. SRM now registers directly with the regular loginbroker service. Doors can be configured to register with specific loginbroker instances if more flexibility is needed.

Renamed services

The hopping service has been renamed to hoppingmanager.

Embedding gplazma

gPlazma has always had two modes of operation: As a standalone service or as a module in which it would be used directly by a door. In 1.9.12 the module-mode was replaced by an automatically created non-exported gPlazma instance running in the same domain as the door. Due to the way cell routing works, this meant that doors in that domain would use the local gPlazma instance.

The explicit module-mode has now been removed completely. Instead, one can achieve the same effect by manually adding a gPlazma instance to a domain with doors and setting gplazma.cell.export to false. Such a gPlazma instance is not published to the rest of dCache and will thus only be used by doors in the same domain.

The benefits of this approach are that it is much more obvious how things are put together, and such a local gPlazma instance can be configured like any other gPlazma instance. It also makes it possible to have other deployments, like two different local gPlazma instances with non-default cell names, one which can be used by some doors, and the other by other doors; or several exported gPlazma instances with non-default cell names, and some doors configured to use one and other doors to use the other instance. This could for instance be used to distinguish between internal and external doors, or maybe have a different gPlazma setup for admin and httpd login.

    [testDomain]
    [testDomain/gplazma]
    gplazma.cell.export = false
    gplazma.cell.name = gPlazma-for-admin
    gplazma.configuration.file = /etc/dcache/gplazma-for-admin.conf

    [testDomain/admin]
    admin.service.gplazma = gPlazma-for-admin
    # The admin service now uses the gplazma service called 'gPlazma-for-admin',
    # which uses the gplazma-for-admin.conf configuration file.

    [testDomain/httpd]
    # httpd uses the main 'gPlazma' service.

Configuration

In dCache 1.9.12 the entire configuration system was rewritten. The new system introduced a layered approach, with default files shipped with dCache, a system wide configuration file, dcache.conf, and a host configuration file, the layout file.

At the time, most configuration properties remained unchanged to ease the transition to the new configuration system. Over time this has led to an inconsistent mess of mixed naming schemes, inconsistent configuration property names, inconsistent units for expressing durations and timeouts, and inconsistent values for boolean properties.

The new configuration system introduced in dCache 1.9.12 also introduced a scoping operator. This operator has led to a lot of confusion, as even expert users didn’t understand its semantics. The operator allowed the same property to have different default values depending on the service that used it. The operator was most widely used for defining a cell name using the common cell.name property, as well as for TCP ports using the port property.

Based on feedback at the 2013 dCache user workshop, we decided to clean up the dCache configuration. The configuration system itself stays mostly unchanged: there are still default files, a dcache.conf file, and a layout file. Most properties have, however, been renamed.

To the extent possible, old property names still work, although they have been marked deprecated and are obsolete or forbidden in dCache 2.11. The scoping operator has been removed and replaced by a strict naming convention.

WARNING Because the scoping operator was removed, some properties that relied on the operator can no longer be supported and these must be changed on upgrade. Except for trivial deployments, upgrading from 2.6 to 2.10 cannot be done without changing at least a few properties.

Naming conventions

All properties now follow a strict and hierarchical naming scheme. Property names are always lower case, a hyphen is used as a word separator, and a dot as a node separator in the naming hierarchy. All properties used by a service are prefixed by the service type; e.g., all srm properties begin with srm. and all pnfsmanager properties with pnfsmanager..

Properties that begin with dcache. don’t belong to any particular service: they are either not used by services (such as properties for defining JVM options, or options for configuring an entire domain), or they are used by multiple services. In the latter case, each service will define a similarly named property prefixed with its own name; e.g., dcache.net.listen defines the primary IP address services bind to, however no service uses that property directly. Instead each service defines a *.net.listen property that defaults to the value of dcache.net.listen, such as srm.net.listen=${dcache.net.listen}. This provides some of the same flexibility as the scoping operator used to, as we can define per-service defaults.
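The per-service defaulting can be modelled in a few lines. This is an illustrative Python sketch, not dCache's configuration loader: it assumes the layers are merged in the order default files, dcache.conf, layout file, and that ${...} references are expanded only after merging. The IP addresses are documentation examples.

```python
import re

# Illustrative model only, not dCache's real configuration loader.
DEFAULTS = {  # stand-in for entries in the shipped default files
    "srm.net.listen": "${dcache.net.listen}",
    "ftp.net.listen": "${dcache.net.listen}",
}
DCACHE_CONF = {"dcache.net.listen": "192.0.2.10"}  # example site-wide value
LAYOUT = {"ftp.net.listen": "198.51.100.7"}        # example ftp-only override

_REFERENCE = re.compile(r"\$\{([^}]+)\}")


def merge(*layers):
    """Merge property layers; later layers override earlier ones."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged


def resolve(name, properties):
    """Expand ${...} references in a property value recursively."""
    value = properties[name]
    return _REFERENCE.sub(lambda m: resolve(m.group(1), properties), value)


props = merge(DEFAULTS, DCACHE_CONF, LAYOUT)
print(resolve("srm.net.listen", props))  # 192.0.2.10 (inherits dcache.net.listen)
print(resolve("ftp.net.listen", props))  # 198.51.100.7 (overridden for ftp only)
```

Setting dcache.net.listen once moves every service, while overriding a single service-prefixed property affects only that service.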

No rule without an exception (except one): Chimera database properties begin with chimera.. Since the Chimera database is accessed by pnfsmanager, nfs, and cleaner, we have chosen a common set of properties to define the database connectivity. In a sense, Chimera is its own service.

Time units

All properties that specify a time duration now have a sibling, suffixed with .unit, that specifies the time unit; e.g., ftp.performance-marker-period specifies the time between two FTP performance markers. ftp.performance-marker-period.unit specifies the time unit of ftp.performance-marker-period (which happens to be SECONDS). In a few cases the time unit is immutable, however in most places it is configurable.

We recommend explicitly defining the unit whenever a property specifying a time duration is changed: in future releases we may decide to change the time unit. Making the unit explicit in your dCache configuration guards against such changes.
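To see why the .unit sibling matters, the following Python helper interprets a duration property together with its unit. It assumes the unit values are the constant names of Java's java.util.concurrent.TimeUnit, as the SECONDS value mentioned above suggests; the helper name and the example value are hypothetical.

```python
# Seconds per unit. The unit names are assumed to be the constants of
# java.util.concurrent.TimeUnit (e.g. SECONDS, MINUTES).
SECONDS_PER_UNIT = {
    "NANOSECONDS": 1e-9,
    "MICROSECONDS": 1e-6,
    "MILLISECONDS": 1e-3,
    "SECONDS": 1,
    "MINUTES": 60,
    "HOURS": 3600,
    "DAYS": 86400,
}


def duration_in_seconds(properties, name):
    """Interpret a duration property using its '.unit' sibling."""
    value = float(properties[name])
    unit = properties[name + ".unit"]
    return value * SECONDS_PER_UNIT[unit]


# Pinning the unit next to the value keeps the meaning stable even if the
# shipped default for the .unit property changes (the value is hypothetical).
site = {
    "ftp.performance-marker-period": "70",
    "ftp.performance-marker-period.unit": "SECONDS",
}
print(duration_in_seconds(site, "ftp.performance-marker-period"))  # 70.0
```

If only the value were set and a later release switched the default unit to MINUTES, the same number would silently mean sixty times longer.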

Booleans

In the past, boolean properties used a mixture of values like true, false, enabled, disabled, yes, no, etc. In some cases the properties involved double negations, like billingDisableTxt, which had to be set to no to enable billing logging to flat text files.

Boolean properties now always use the values true and false, and are always phrased as enabling something.
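When translating an old dcache.conf by hand or by script, the double negations are the easy part to get wrong. The hypothetical Python helper below normalizes the legacy spellings listed above to true or false and inverts the value when the old property was phrased as disabling something. It does not know the new property names; those still have to be looked up.

```python
# Hypothetical migration helper: maps legacy boolean spellings to the new
# true/false values.
LEGACY_TRUE = {"true", "yes", "enabled"}
LEGACY_FALSE = {"false", "no", "disabled"}


def to_new_boolean(old_value, negated=False):
    """Normalize a legacy boolean value.

    Set negated=True when the old property disabled something
    (e.g. billingDisableTxt) and the new one enables it.
    """
    value = old_value.strip().lower()
    if value in LEGACY_TRUE:
        enabled = True
    elif value in LEGACY_FALSE:
        enabled = False
    else:
        raise ValueError(f"not a recognised boolean: {old_value!r}")
    if negated:
        enabled = not enabled
    return "true" if enabled else "false"


print(to_new_boolean("no", negated=True))  # billingDisableTxt=no -> true
```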

Common properties

Some property naming patterns are reused in most services. This section describes some of them. In the following, an asterisk is used where the service type is to be inserted.

- *.cell.name: Defines the name of the primary cell of the service.

- *.cell.export: Controls whether the cell name is exported as a well known cell. Well known cells must have a unique name throughout dCache, but have the benefit that they can be addressed using the cell name only rather than the fully qualified cell address (that also contains the domain name).

  This can for instance be used to embed gplazma in the same domain as a door without exporting the gPlazma name. Such a gplazma instance is only used by doors in that domain. This replaces the feature to use gPlazma as a module.

- *.service.S: The cell address of service S. E.g., ftp.service.pnfsmanager is the cell address of pnfsmanager as used by ftp doors. If the service has a well known name, only the well known name needs to be specified in the address. Otherwise the fully qualified cell address must be used. We tried to make all service names configurable through this mechanism.

  Combined with the ability to change cell names and not exporting services as well known, these changes provide lots of flexibility for advanced deployments. Any hard-coded service names left in dCache should be considered a bug to be reported.

- *.service.S.timeout: The communication timeout for requests to service S.

- *.db.: Database parameters; e.g., name, host, user, password, etc.

- *.enable.: Feature switches; e.g., *.enable.space-reservation.

any-of annotations

A new any-of annotation was added. It is used for properties that accept a list of comma separated values. This is reserved for use in default files shipped with dCache and plugins.

Layout files

The example layout files were updated and placed in the directory /usr/share/dcache/examples/layouts instead of /etc/dcache/layouts.

IMPORTANT RPM users who used to call their layout files single, head, or pool should check that RPM did not rename their layout file.

Databases

Schema management

Except for the srm and replica services, all databases in dCache are managed through the liquibase schema management library. This now also includes the spacemanager service. The SQL scripts for creating the Chimera schema by hand are no longer shipped with dCache.

Schema changes are automatically applied the first time a service using the schema is started. Such changes may also be applied manually before starting dCache using the dcache database update command. It will update the schemas of all databases used by services configured on the current node.

For even more hands-on schema management, the dcache database showUpdateSQL command was added. It outputs the SQL that would be executed if dcache database update was executed. The emitted SQL may be applied manually to the database, thereby updating the schema.

Before downgrading dCache, it is essential that schema changes are rolled back to the earlier version. This needs to be done before installing the earlier version of dCache.

Schema changes can be rolled back using the dcache database rollbackToDate command. It is important to remember the date the schema changes were applied in the first place so that the schema can be rolled back to the version prior to the upgrade.

NOTE The nfs service does not automatically apply schema changes to Chimera; only the pnfsmanager service does that. nfs will, however, check whether the Chimera schema is current and refuse to start if not. One consequence of this is that if nfs and pnfsmanager run in the same domain, either nfs must be placed after pnfsmanager in the layout file or the changes must be applied manually using dcache database update.

Schema changes

Chimera

Several changes have been made to the Chimera schema. For large databases applying these changes will be a lengthy operation. If this is a concern, we recommend doing a test migration on a clone of the database.

The uri_encode and uri_decode stored procedures have been introduced. These are used by Enstore.

Space manager

Several changes have been made to the space manager schema. For large databases applying these changes will be a lengthy operation. If this is a concern, we recommend doing a test migration on a clone of the database.

Third party alterations to the schema should be carefully inspected before upgrading, and possibly be rolled back if they conflict with the schema changes. Workarounds for outdated statistics for the srmspacefile table are no longer necessary.

The database schema contains several fields which are aggregates of other fields. In earlier versions, these aggregated fields were maintained by dCache, adding considerable overhead in the form of database round trips and locks. This logic has now been embedded in the database in the form of database triggers. Be aware that these triggers are essential and must be restored if the database is restored from backup.

Many other changes have been made to the schema. Any third party queries against this database will most likely require updates.

Connection pool

The BoneCP database connection pool library has been replaced with HikariCP. HikariCP is more actively maintained, smaller, faster, and more robust.

HikariCP has slightly different controls for adjusting the database connection pool. While BoneCP partitioned connections, HikariCP has no such concept. HikariCP also maintains a minimum number of idle connections, in contrast to the minimum number of connections that BoneCP maintains. Sites that have tuned their database connection limits should take note of these differences and adjust their configuration accordingly.

The srm service has been updated to use HikariCP instead of an ad-hoc connection pool.

JDBC 4 drivers

The *.db.driver configuration properties have been marked obsolete. The proper driver is now automatically discovered from the JDBC URI. Only JDBC 4 compliant database drivers are supported (this is only relevant if you install a custom JDBC driver).

Name space

Set group ID

The setgid bit on directories has been implemented in Chimera. This bit is useful for shared directories in which files and sub-directories have to inherit the group ownership of the parent directory. Using the setgid bit is more flexible than relying on the pnfsmanager.inherit-file-ownership property.
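The inheritance rule can be illustrated with the following Python sketch. It models the setgid semantics familiar from Linux and BSD file systems (new entries take the directory's group, and new sub-directories also inherit the bit). We assume Chimera behaves the same way, but the sketch is not derived from its code, and the Inode class and create_child function are hypothetical.

```python
import stat
from dataclasses import dataclass


@dataclass
class Inode:
    mode: int  # file type bits | special bits | permission bits
    uid: int
    gid: int


def create_child(parent, uid, primary_gid, perm, is_dir):
    """Ownership of a new entry under `parent`, following the usual
    Linux/BSD setgid-directory rules (a sketch, not Chimera's code)."""
    mode = (stat.S_IFDIR if is_dir else stat.S_IFREG) | perm
    if parent.mode & stat.S_ISGID:
        gid = parent.gid          # inherit the directory's group
        if is_dir:
            mode |= stat.S_ISGID  # new sub-directories keep the bit
    else:
        gid = primary_gid         # default: creator's primary group
    return Inode(mode=mode, uid=uid, gid=gid)


shared = Inode(mode=stat.S_IFDIR | 0o2775, uid=0, gid=5000)  # setgid, group 5000
f = create_child(shared, uid=1001, primary_gid=100, perm=0o644, is_dir=False)
d = create_child(shared, uid=1001, primary_gid=100, perm=0o755, is_dir=True)
print(f.gid, d.gid, bool(d.mode & stat.S_ISGID))  # 5000 5000 True
```

Because sub-directories inherit the bit, the whole tree below a shared directory keeps the group, which a single global setting cannot express per directory.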

Creation time

Chimera has been updated to track the creation time of files in addition to the attribute change time. The creation time for existing files is obviously unknown. When updating the database schema, existing files are assigned a creation time equal to the oldest of their mtime, ctime, and atime fields.
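Expressed as code, the back-filling rule is simply a minimum over the three timestamps. The Python function below is only an illustration with a hypothetical name. Since ordinary operations cannot touch a file before it exists, the real creation time is normally no later than the value assigned.

```python
def backfilled_creation_time(mtime, ctime, atime):
    """Creation time assigned to a pre-existing file during the schema
    update: the oldest of its three recorded timestamps."""
    return min(mtime, ctime, atime)


# Example with hypothetical Unix timestamps.
print(backfilled_creation_time(mtime=1404000000, ctime=1400000000, atime=1409000000))
# 1400000000
```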

DCAP was updated to report last attribute change time in the ctime field of a stat request. This was the intended semantics from the beginning and is what ctime means in the POSIX standard, but in dCache ctime has in some places been confused with creation time.

Command line interface

The Chimera command-line tool chimera-cli has been replaced with chimera. The new tool offers an interactive shell when invoked without arguments and can execute scripts. It can also be invoked with a command on the command-line like the old chimera-cli tool. Note that some commands have a different argument order compared to the old tool (they now use the same order as used by similar tools defined in the POSIX standard).

To learn about the supported commands, start the Chimera shell by running chimera and then type help.

Lookup permission check

Validation of lookup permissions on the full path is enabled by default. The full path permission check may deny access to files and directories that was previously allowed. Sites are advised to check the permission settings on directories on upgrade, or to disable the POSIX compliant full path permission check by setting the property pnfsmanager.enable.full-path-permission-check to false.
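The following Python sketch shows what a full path check means: every directory on the way to the target must grant search (execute) permission to the caller, so a single restrictive intermediate directory denies the lookup even if the target's parent is open to everyone. It uses the standard POSIX owner/group/other class selection, ignores superuser privileges and ACLs, and is not dCache's implementation; all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Directory:
    path: str
    mode: int  # permission bits, e.g. 0o755
    uid: int
    gid: int


def can_search(d, uid, gids):
    """POSIX class selection: owner bits, else group bits, else other bits."""
    if uid == d.uid:
        return bool(d.mode & 0o100)
    if d.gid in gids:
        return bool(d.mode & 0o010)
    return bool(d.mode & 0o001)


def full_path_lookup_allowed(ancestors, uid, gids):
    """Every directory from the root down to the parent of the target must
    grant search (x) permission; the first denial blocks the lookup."""
    return all(can_search(d, uid, gids) for d in ancestors)


ancestors = [
    Directory("/", 0o755, 0, 0),
    Directory("/data", 0o750, 0, 5000),      # closed to "other"
    Directory("/data/public", 0o777, 0, 0),  # open to all, yet behind /data
]
print(full_path_lookup_allowed(ancestors, uid=1001, gids={100}))   # False
print(full_path_lookup_allowed(ancestors, uid=1001, gids={5000}))  # True
```

When auditing directories before upgrading, the intermediate directories such as /data in this example are the ones to look at.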

Authn & Authz

Password authentication

A gPlazma password authentication plugin called htpasswd was added, supporting the common htpasswd file format used by many web servers. Only the MD5 hash format is currently supported. Use the htpasswd utility available on most operating systems to create and manage htpasswd files.

LDAP

The gPlazma ldap plugin was modified to fail in the map or session phase of a login if no user name is supplied or if there is no mapping for this user. Please check your gPlazma configuration if you use the ldap plugin.

Previous versions of the plugin hard coded values for the home and root directory. The root directory was set to / and the home directory was set to the home directory as stored in the LDAP server. The behavior is now configurable through two new properties, gplazma.ldap.home-dir and gplazma.ldap.root-dir. For those two properties it is possible to use keywords that will be substituted by the corresponding attribute value in LDAP. For example, %homeDirectory% will be replaced by the value of attribute homeDirectory in the people tree.
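The substitution can be sketched in Python as below. The exact parsing rules of the ldap plugin are not described here: the sketch assumes a keyword is an attribute name enclosed in percent signs, as in the %homeDirectory% example, and treats a missing attribute as an error; both are assumptions. Presumably, setting gplazma.ldap.home-dir to %homeDirectory% and gplazma.ldap.root-dir to / reproduces the previous hard-coded behavior.

```python
import re

# Keywords look like %attributeName%; the accepted characters are an assumption.
_KEYWORD = re.compile(r"%([A-Za-z][A-Za-z0-9-]*)%")


def expand_ldap_template(template, attributes):
    """Substitute %attr% keywords with values from the user's LDAP entry."""
    def substitute(match):
        name = match.group(1)
        if name not in attributes:
            raise KeyError(f"LDAP entry has no attribute {name!r}")
        return attributes[name]
    return _KEYWORD.sub(substitute, template)


entry = {"uid": "alice", "homeDirectory": "/home/alice"}  # hypothetical entry
print(expand_ldap_template("%homeDirectory%", entry))  # /home/alice
print(expand_ldap_template("/", entry))                # /
```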

Kerberos

dCache will use the Kerberos configuration in /etc/krb5.conf by default. The existing configuration is still supported, but the system configuration file is now taken as the default.

GridSite delegation

Support for GridSite delegation v2.0.0 was added. The endpoint is run as part of the srm service and has the path /srm/delegation. The GridSite delegation endpoint allows a user to delegate a credential, discover the remaining lifetime of any delegated credential, and destroy delegated credentials. Those credentials delegated via GSI (typically as part of an SRM operation) will have the delegated ID of gsi. Using this, a client may query the lifetime of any already delegated credential and delete credentials delegated via SRM operations.

A delegation client that allows scripted and console-based interaction with GridSite endpoints has been implemented. The client is released independently, as part of the dCache srm client package.

Logging

If you have customized your logback.xml, it is important that you reset the file back to its default when upgrading to dCache 2.10. You can reapply local modifications to the updated default after upgrade.

The biggest change to logging is the addition of an access log. The log is created for every dCache domain, although currently only the srm, ftp, and webdav services support it. These log files contain an entry for every protocol level request being made and are thus analogous to the common access logs generated by most web servers. The log contains a timestamp, the client address, and various protocol specific fields describing the request and response. For complex protocols like SRM, the access log cannot possibly contain the entire request. The log does contain the SRM request ID and the entire request can be retrieved through the admin shell. The log is created in /var/log/dcache/ and is named after the domain followed by the string .access. The log is rotated and compressed daily and kept for thirty days (this is configurable through logback).

Most services have had their log output improved. In particular the srm and spacemanager services are a lot less noisy. For srm the session identifier has been shortened.

Admin shell

SSH

SSH 1 support has been removed from the admin door. This protocol is known to be insecure. The admin door now requires SSH 2 and defaults to port 22224.

For password based authentication, the door relies on gPlazma. Thus a password authentication plugin (e.g., kpwd or htpasswd) must be set up. The account must have GID 0 to allow login to the admin door (the GID is configurable). As an alternative, we recommend key-based authentication.

The use of GID 0 also applies to the webadmin service. Users with this GID have admin privileges in webadmin. In the past webadmin used GID 1000, but the default has been changed.

pcells

A pcells SSH subsystem was added. The current releases of pcells support this subsystem.

Help

dCache is in the middle of a transition between two frameworks for implementing the admin shell commands. The newer of these frameworks provides much nicer help output. The format is similar to classic man pages. In ANSI terminals the output uses highlighting to make the output easier to glance over.

While transitioning from one implementation to the other, we have added more documentation for each command. This is accessed using help command.

Pool selection

Partition types

Pool selection is controlled by pool manager partitions. Different partition types provide different pool selection algorithms. This section outlines changes to those partition types.

- classic

  Some years ago, a new load balancing scheme called weighted available space selection (wass) was introduced. Since dCache 2.2 this scheme has been the default for new installations. The old scheme, dubbed classic, has now been removed and wass is the new default partition type. The change has allowed a lot of legacy code to be removed and enables future development. In particular, the concept of space cost is more or less gone from the entire code base.

  IMPORTANT Sites still using classic must manually update poolmanager.conf to create wass partitions instead. When switching from classic to wass, it is important to reset cpucostfactor and spacecostfactor, as the interpretation of these parameters has changed. We suggest using the default value of 1.0 for both parameters.

- buffer

  This new partition type was added and is intended for pools used as transfer buffers. This algorithm chooses a pool based on weighted random selection, with weights derived from the pools’ load only. Free space has no effect, as all pools with sufficient space to store the file are considered. This partition type is suitable for pools which do not hold files permanently, such as import and export pools.

  The wass partition type is not suited for this use case, as wass prioritizes balancing free space over load.

  The buffer partition type respects the mover cost factor and performance cost factor settings, using an interpretation similar to wass.

- wrandom

  The wrandom partition type has been removed. The same behavior may be achieved with wass.
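The buffer selection rule can be sketched as follows. Free space only decides which pools are eligible; among those, the choice is random with weights derived from load. The actual weighting formula and the handling of the mover and performance cost factors are not described here, so the Python sketch assumes a hypothetical weight of 1 / (1 + load); the Pool class and the load scale are also hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Pool:
    name: str
    free_bytes: int
    load: float  # hypothetical normalised load; 0.0 means idle


def select_buffer_pool(pools, file_size, rng=random):
    """Weighted random choice among pools that can hold the file.

    Free space only decides eligibility; the weight depends on load alone.
    The weight 1 / (1 + load) is an assumption for illustration.
    """
    candidates = [p for p in pools if p.free_bytes >= file_size]
    if not candidates:
        raise LookupError("no pool has enough free space for the file")
    weights = [1.0 / (1.0 + p.load) for p in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


pools = [
    Pool("import-1", free_bytes=50 * 2**30, load=0.1),
    Pool("import-2", free_bytes=900 * 2**30, load=2.0),  # more space, busier
    Pool("import-3", free_bytes=1 * 2**30, load=0.0),    # too small for the file
]
print(select_buffer_pool(pools, file_size=4 * 2**30, rng=random.Random(7)).name)
```

Unlike wass, the pool with far more free space gets no advantage here; only its higher load matters.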

Space cost

With the removal of the classic partition type, the concept of space cost is gone from dCache. This affects several admin shell commands:

- Commands that could report space cost no longer do so.

- The rebalance pgroup command of poolmanager no longer accepts sc as a value for the -metric option; free has been added instead.

- The migration module no longer recognizes the source.spaceCost and target.spaceCost variables, and it no longer accepts best as a value for the -select option.

Performance cost

For wass partitions, the algorithm for computing a performance cost has been modified. The performance cost is used for read pool selection.

In previous versions, tape queues (both flush and stage) have contributed to the performance cost the same way as any other mover queues. However, often the number of active flush and stage jobs on a pool have no relationship with the actual load incurred by these jobs: They only indicate how many concurrent instances of the HSM script to create, while the number of streams to and from disk is controlled by the number of tape drives available. Moreover, the number of queued flush jobs is misleading, as the pool has several flush queues not taken into account when computing the performance cost.

The cost calculation has been changed so that the maximum number of active HSM jobs no longer influences the algorithm. Instead, an HSM queue contributes with the cost 1 - 0.75^n, where n is the number of active jobs, unless the queue has queued jobs, in which case the contribution is 1.0. The final performance cost is the average of the contribution of each queue.
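The formula is easy to check numerically. The Python sketch below computes the contribution of an HSM queue and averages a list of contributions. How regular mover queues contribute is not covered here, so their values would simply be passed in as precomputed numbers. Function names are hypothetical.

```python
def hsm_queue_contribution(active_jobs, queued_jobs):
    """Contribution of one HSM (flush or stage) queue to the performance cost."""
    if queued_jobs > 0:
        return 1.0                     # any backlog counts as fully loaded
    return 1.0 - 0.75 ** active_jobs   # approaches 1.0 as active jobs grow


def performance_cost(contributions):
    """Average of the per-queue contributions."""
    if not contributions:
        raise ValueError("at least one queue is required")
    return sum(contributions) / len(contributions)


# Active-only queues with n = 0, 1, 2, 4 active jobs:
print([hsm_queue_contribution(n, 0) for n in (0, 1, 2, 4)])
# [0.0, 0.25, 0.4375, 0.68359375]
```

The configured maximum number of active jobs plays no role: a stage queue with two active jobs and a flush queue with one active and three queued jobs give performance_cost([0.4375, 1.0]), which is 0.71875.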

NOTE The performance cost is only used for read pool selection. For write pool selection (including which pool to stage a file to), the weighted available space selection algorithm is used.

Write pools

Error messages for when no write pools are found for a transfer have been improved. For FTP, these errors are now classified into transient and permanent errors with an appropriate error code.

Space management

dCache supports SRM-style space reservations through the spacemanager service. This service has undergone an almost complete rewrite. The new version should scale better, be easier to use, and require less space in the database. Extensive changes have been made to the admin shell interface and to the configuration properties of space manager. Please consult the built-in help output and the spacemanager.properties file for details. The admin shell commands accept a great many options that alter their behavior, so make sure to read the help output offered by each of them. The new commands no longer expose the auto-generated link group id and file id database keys. Instead, link groups are identified by their name, and files by their PNFS ID or path.

Third party scripts accessing the database directly will have to be updated due to the extensive schema changes. The same goes for scripts accessing the admin shell interface, since the space manager commands and their output have been changed.

Despite the extensive changes, the basic concepts of space reservations in dCache have not changed much. The most important change is to how uploads outside space reservations interact with the space manager service. To understand these changes we first have to recall how space reservations are supported in dCache.

The set of pools in dCache is partitioned into non-overlapping link groups. Each link group is a collection of one or more links, and the pools accessible through those links provide the space of the link group. This space is managed by space manager. When pool manager is asked to select a write pool, it will either select among the links that are not in any link group (unmanaged space) or among the links within a particular link group (managed space). Space reservations are created within link groups. Link group capabilities and link group authorization control in which link group a particular reservation can be made and who can make it. Reservations created by the administrator are typically placed explicitly in a link group, while reservations made by end users through SRM are matched to link groups by space reservation parameters and the identity of the user.

When files are uploaded to an existing reservation, space manager can look up the appropriate link group from the reservation and inject it into the write pool selection request before handing the request over to pool manager. The reservation can be given explicitly as part of an SRM upload, or the directory can be bound to a reservation using the WriteToken directory tag. Either way, the file is written to the pools on which the space was reserved.

The above applies equally to dCache 2.6 and 2.10. What has changed is how space manager deals with files that are not uploaded to an existing reservation.

In dCache 2.6, two configuration properties controlled what would happen. The SRM could be configured to create a reservation on the fly prior to the upload. Once this implicit reservation was created, the rest of the upload proceeded as if it were done to an existing reservation. Alternatively, space manager could be configured to do the same for non-SRM transfers. This apparent duplication of functionality was due to implementation details of the SRM service.

Worse, enabling either option prevented uploads to unmanaged space (the links not in any link group). Enabling implicit reservations in SRM forced all SRM uploads into a link group. Likewise, enabling the option in space manager forced all non-SRM uploads into a link group. Transfers that succeeded with these options disabled could therefore fail once the options were enabled.

The above problem has been resolved: there is now a single property in spacemanager, spacemanager.enable.unreserved-uploads-to-linkgroups, which controls the behavior for both SRM and non-SRM uploads. When a file is uploaded outside any existing reservation, enabling this property causes space manager to attempt to create a temporary reservation for the file. If this succeeds, the upload proceeds as if it were a normal reservation. If no temporary reservation can be made, space manager falls back to using unmanaged space. This happens when the file cannot be served by any links in link groups, or when the user is not authorized to write to those link groups.
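The resulting decision can be modeled roughly as follows. This is a simplified sketch, not space manager's code. The LinkGroup fields and the first-match order are assumptions made for illustration. The real service matches link groups using link group capabilities and authorization rules.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class LinkGroup:
    name: str
    can_serve_file: bool  # hypothetical: links in this group accept the file
    authorized: Set[str] = field(default_factory=set)  # hypothetical: users allowed to reserve


def target_space(reservation_group: Optional[str], user: str,
                 link_groups: List[LinkGroup],
                 unreserved_uploads_to_linkgroups: bool) -> str:
    """Return the link group to write into, or 'unmanaged' for unmanaged space."""
    if reservation_group is not None:
        # Upload to an existing reservation: use that reservation's link group.
        return reservation_group
    if unreserved_uploads_to_linkgroups:
        # Try to place a temporary reservation in a suitable link group.
        for group in link_groups:
            if group.can_serve_file and user in group.authorized:
                return group.name
    # Feature disabled or no reservation possible: fall back to unmanaged space.
    return "unmanaged"
```

Unlike the two dCache 2.6 options, a failed attempt here never blocks the upload. It only means the file lands in unmanaged space.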

A related change is that only permanent reservations are published by the info service and the webadmin. This prevents time-limited (often implicit or short-lived) reservations from bloating the output.

Nearline storage

The interface to nearline storage (tapes) has been rewritten. Nearline storages are now supported through drivers. These drivers can be packaged and shipped as dCache plugins. A driver that supports the classic HSM scripts is shipped with dCache and will be used by default for existing setups.

Nearline storage drivers solve many issues of classic HSM scripts:

- A callout to an external script for every invocation was a performance issue, because each callout to an external interpreter carried considerable overhead.

- A callout to an external script was a tape efficiency issue, because it limited the number of concurrent callouts: every file required a process and four Java threads. Administrators had to balance how many processes and threads the host could handle against how large the batches submitted to the tape system could be.

- The stage queue was limited by the available disk capacity, because the pool had to reserve space for the file before calling the script. As a result, the tape reordering queue was limited by disk space.

A nearline storage driver is written in a JVM-supported language (for example, Java, Scala or Groovy) and is executed within dCache. This avoids the overhead of calling out to an external script. Request queuing is dealt with by the driver, which allows the driver to reorder requests in a way that optimizes tape access.
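The following sketch shows the kind of reordering that driver-side queuing makes possible. It is not a dCache driver: real drivers are written in a JVM language against dCache's nearline storage interface. The StageRequest fields (tape, position) are hypothetical stand-ins for whatever location information a tape system provides.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class StageRequest:
    pnfsid: str
    tape: str      # hypothetical: volume label of the tape holding the file
    position: int  # hypothetical: file position on that volume


def reorder(requests: Iterable[StageRequest]) -> List[StageRequest]:
    """Group queued stage requests by tape and sort each group by position,
    so that every tape is mounted once and read sequentially."""
    by_tape: Dict[str, List[StageRequest]] = defaultdict(list)
    for request in requests:
        by_tape[request.tape].append(request)
    ordered: List[StageRequest] = []
    for tape in sorted(by_tape):
        ordered.extend(sorted(by_tape[tape], key=lambda r: r.position))
    return ordered
```

Because the driver owns the queue, it can hold many more requests than a disk-space-limited stage queue, which gives this kind of reordering more to work with.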

Since nearline storage request queuing is no longer part of dCache, the maximum active request limits are no longer reported to poolmanager. For some drivers, such a limit may not even exist. Pool selection has been updated so that it no longer relies on these values.

The st, rh, and hsm family of commands in the pool service have been updated. The nearline driver is specified when defining a new nearline storage. The new hsm create command is used to define the nearline storage, while the hsm set command has been updated to allow the driver to be configured.

script driver

dCache ships with a driver called script. This driver performs a callout to the classic HSM integration script. The driver accepts a number of arguments, settable through the hsm set command:

- -command: The path to the script.

- -c:gets: Maximum number of concurrent reads.

- -c:puts: Maximum number of concurrent writes.

- -c:removes: Maximum number of concurrent removes.

To provide backwards compatibility with existing setups, the script driver is the default. During initialization, the rh set max active, st set max active and rm set max active commands are translated into configuration parameters for instances of the script driver. Support for these commands is, however, deprecated, and it is recommended that the pool setup file is regenerated using the save command.
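The translation can be pictured with the following sketch. The mapping follows the queue names (rh restores, i.e. stages, st stores, i.e. flushes, rm removes). The -option=value output notation is an assumption, so check the help output of hsm set for the exact syntax. The helper itself is hypothetical. In practice, running save is what rewrites the setup file.

```python
import re
from typing import Iterable, List

# Legacy queue commands and the script driver option each one corresponds to
# (mapping inferred from the queue names; output notation is assumed).
LEGACY_TO_OPTION = {"rh": "-c:gets", "st": "-c:puts", "rm": "-c:removes"}
LEGACY_COMMAND = re.compile(r"^(rh|st|rm) set max active (\d+)$")


def translate_legacy(setup_lines: Iterable[str]) -> List[str]:
    """Collect script driver options equivalent to legacy 'set max active' lines."""
    options = []
    for line in setup_lines:
        match = LEGACY_COMMAND.match(line.strip())
        if match:
            options.append(f"{LEGACY_TO_OPTION[match.group(1)]}={match.group(2)}")
    return options
```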

copy driver

The copy driver is similar to the classic hsmcp script. It copies files between the pool and some other directory. It accepts a single configuration property:

- -directory: The path of the directory into which files are copied.

link driver

The link driver is similar to the copy driver, but creates hard links rather than copying files. The directory in which hard links are created must be on the same file system as the pool’s data directory. Like the copy driver, the link driver accepts only a single configuration property:

- -directory: The path of the directory within which hard links are created.

tar driver

This is an experimental driver, provided mostly as a demonstration for now. The driver bundles files into tarballs and stores them in a configurable directory. The driver currently does not support removing files. It accepts a single configuration property:

- -directory: The path of the directory within which tarball files are created.

The HSM cleaner is enabled by default. It used to be disabled by default because traditionally PNFS setups all had custom solutions for how to remove files from tape. Now that cleaning from tape is an integral part of dCache and PNFS is no longer supported, having the HSM cleaner work out of the box is a sensible default. It can be disabled by setting:

cleaner.enable.hsm = false

IPv6

dCache enables IPv6 by default. It still prefers to use IPv4 addresses on dual-stack machines (those with both IPv4 and IPv6 addresses) so the impact should be minor. The change implies that services will now also listen on IPv6 interfaces. To disable this, define

dcache.java.options.extra=-Djava.net.preferIPv4Stack=true

FTP Extensions for IPv6 (RFC 2428) have been implemented. These extensions are incompatible with GridFTP v2. To allow IPv6 FTP transfers to be redirected to pools, support for Globus delayed passive has been added. This is an alternative to using GridFTP v2 GETPUT. Delayed passive allows the data channel to be created directly between the client and the pool. In contrast to GridFTP v2, delayed passive supports IPv6. Note that current clients often default to disabling delayed passive, even when delayed passive is implemented. Without delayed passive, connecting with IPv6 will cause the data channel to be proxied by the door.

If the client connects to the door using IPv4 and the pool does not support IPv4, then the data channel is proxied through the door. Similarly, if the client connects to the door using IPv6 and the pool does not support IPv6, then the data channel is proxied through the door. This is the case even when the client is dual-stacked. As with GridFTP v2, the use of direct data channels is subject to the ftp.proxy.on-passive and ftp.proxy.on-active properties.
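The address family rule above can be summarized in a few lines. The sketch is hypothetical: proxy_forced stands in for the effect of ftp.proxy.on-passive and ftp.proxy.on-active. It also ignores the separate requirement that the client uses delayed passive (or GridFTP v2 GETPUT over IPv4) to be redirected at all.

```python
from typing import Set


def data_channel_proxied(client_family: str, pool_families: Set[str],
                         proxy_forced: bool = False) -> bool:
    """Return True if the door has to relay the data channel.

    client_family: "IPv4" or "IPv6", the family the client used to reach the door.
    pool_families: address families the pool supports.
    proxy_forced: hypothetical stand-in for ftp.proxy.on-passive / on-active.
    """
    if proxy_forced:
        return True
    # Being dual-stacked does not help the client: only the family of the
    # control connection to the door counts.
    return client_family not in pool_families
```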

RFC 2428 is only supported on IPv6 connections. When clients connect over IPv4, we pretend not to support the IPv6 extensions. This avoids unnecessary proxying of the data channel for IPv4 clients that support RFC 2428.

NFS

NFS 4.0

Added support for NFS v4.0. Note that there is no parallel NFS (pNFS) extension for NFS v4.0; therefore, the NFS door will act as a proxy. This means that all data will flow through the door for clients using NFS v4.0.

dot commands

Dot commands are special file names that, when opened, trigger an action in dCache. They are used to access additional meta data and to interact with dCache. dCache NFS supports dot commands. Most of these date back all the way to PNFS (the predecessor of Chimera), but a few new dot commands have been added in this release.

.(get)(filename)(checksum)

Retrieves all stored checksums for a file. The command takes the forms .(get)(filename)(checksum) or .(get)(filename)(checksums). Both return a comma-delimited list of type:value pairs for all checksums stored in the Chimera database.

$ cat '.(get)(dcache_2.6.5~SNAPSHOT-ndgf6_all.deb)(checksum)'

ADLER32:3046b37d
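Scripts can use the same dot command by opening the magic name inside the file's directory on an NFS mount. This is a minimal sketch. The mount point you pass in is an assumption, and parse_checksums only handles the comma-delimited type:value format described above.

```python
import os
from typing import Dict


def parse_checksums(text: str) -> Dict[str, str]:
    """Parse 'TYPE:value,TYPE:value' as returned by .(get)(file)(checksums)."""
    result = {}
    for item in text.strip().split(","):
        if item:
            kind, _, value = item.partition(":")
            result[kind] = value
    return result


def read_checksums(directory: str, filename: str) -> Dict[str, str]:
    # Opening the magic name in the file's directory triggers the dot command.
    path = os.path.join(directory, f".(get)({filename})(checksums)")
    with open(path) as handle:
        return parse_checksums(handle.read())
```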

.(fset)(filename)(pin)(duration)

Pins or unpins a file. Files that are not on disk are staged when pinned. The command takes the form .(fset)(filename)(pin|stage|bringonline)(duration)[(SECONDS|MINUTES|HOURS|DAYS)]. The variants of the third argument are equivalent in effect. The last argument is optional and defaults to SECONDS. A duration value of 0 unpins the file. The command does not allow the user to pin a file indefinitely, because the duration must always be given as an explicit integer value.

$ touch '.(fset)(dcache_2.6.5~SNAPSHOT-ndgf6_all.deb)(pin)(60)'

$ cat '.(id)(dcache_2.6.5~SNAPSHOT-ndgf6_all.deb)'

00001E687A901C16407AB6DBD5F8FF7DF95B

$ ssh -p 22224 -l admin localhost

dCache Admin (VII) (user=admin)

[dcache] (local) admin > cd PinManager

[dcache] (PinManager) admin > ls 00001E687A901C16407AB6DBD5F8FF7DF95B

[32460414] 00001E687A901C16407AB6DBD5F8FF7DF95B by 0:0 2014-10-23 11:21:52 to 2014-10-23 11:22:52 is PINNED on pool1:PinManager-3e734bfc-4276-4395-85a8-76fa7ac1757e

total 1
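From a script, the pin request in the session above can be reproduced by building the same magic name and touching it. This is a minimal sketch. The helper names are hypothetical, and only the pin variant of the third argument is generated.

```python
import os
import subprocess

UNITS = ("SECONDS", "MINUTES", "HOURS", "DAYS")


def pin_name(filename: str, duration: int, unit: str = "SECONDS") -> str:
    """Build the .(fset)(...)(pin)(...) name; a duration of 0 means unpin."""
    if duration < 0:
        raise ValueError("duration must not be negative")
    if unit not in UNITS:
        raise ValueError(f"unit must be one of {UNITS}")
    return f".(fset)({filename})(pin)({duration})({unit})"


def pin(directory: str, filename: str, duration: int, unit: str = "SECONDS") -> None:
    # Same as the shell example: `touch` the magic name in the file's directory.
    subprocess.run(["touch", os.path.join(directory, pin_name(filename, duration, unit))],
                   check=True)
```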

Export options

The dCache NFS door exports file systems according to the directives in /etc/exports. A couple of new options for use in this file have been introduced.

all_squash

Added support for the all_squash export option, i.e. all users are mapped to nobody.

dcap and no_dcap

Added new export options dcap and no_dcap. The former is the default, while the latter hides the .(get)(cursor) dot command that DCAP clients use to detect an NFS-mounted dCache. Using no_dcap forces DCAP clients to read data through NFS instead of through DCAP.

Attribute caching

Server-side attribute caching drastically improves performance for some workloads. Three new properties have been added to configure the cache: nfs.namespace-cache.time, nfs.namespace-cache.time.unit and nfs.namespace-cache.size.

Pool resets

The pool reset id command has been added to the nfs door to reset a pool’s device id. This command is used to recover from unknown failures. The Linux kernel may ban a pool (due to some real or imagined protocol violation) and send IO through the nfs door instead. The command lets the door simulate a pool reboot, which triggers the client to reassess its opinion of the pool.

FTP

Control channel

Added support for the CREATE, UNIX.ctime and UNIX.atime facts for the MLSD and MLST commands (see RFC 3659).

Improved compatibility with the ncftp client by adding a leading zero to the value of the mode fact.
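For scripts that parse MLSD listings, each entry is a list of name=value facts followed by a space and the path name (RFC 3659). The sketch below splits such an entry. The sample entry is made up for illustration, and the exact set of facts a given door returns may differ.

```python
from typing import Dict, Tuple


def parse_mlst_line(line: str) -> Tuple[Dict[str, str], str]:
    """Split an MLSD entry (or an MLST line) into its facts and the path name."""
    line = line.rstrip("\r\n")
    if line.startswith(" "):
        line = line[1:]  # MLST responses prefix the entry with a single space
    facts_part, _, name = line.partition(" ")
    facts = {}
    for fact in facts_part.split(";"):
        if fact:
            key, _, value = fact.partition("=")
            facts[key.lower()] = value  # fact names are case-insensitive
    return facts, name


facts, name = parse_mlst_line("type=file;size=628736;UNIX.mode=0644; foobar2")
print(facts["unix.mode"], name)  # 0644 foobar2
```

On plain (non-GSI) FTP doors, Python's own ftplib.FTP.mlsd() performs this parsing for you.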

Added support for the MFMT, MFCT and MFF commands for modifying facts, as described in an Internet draft.

Added support for the HELP and SITE HELP commands as defined in RFC 959. This improves compatibility with some clients.

Data channel

The listening socket of a passive FTP data channel is now bound to a single address rather than the wildcard address. The FTP protocol only allows a single address to be returned to the client, so there is no point in binding to the wildcard address. Binding to the wildcard address could also succeed even if the port on the specific address returned to the client was already in use. The change does have an observable effect. Previously, the pool sent a hostname back to the door, and the door did a DNS lookup to find the address to send to the client. Now the pool sends an IP address.
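At the socket level, the difference looks roughly like this. The sketch is a Python illustration only (the pool itself is written in Java), and open_passive_listener is a hypothetical helper name.

```python
import socket


def open_passive_listener(address: str, port: int = 0) -> socket.socket:
    """Listen on one specific address rather than the wildcard address."""
    family = socket.AF_INET6 if ":" in address else socket.AF_INET
    listener = socket.socket(family, socket.SOCK_STREAM)
    # Binding the specific address checks the port on exactly the address
    # that will be advertised to the client.
    listener.bind((address, port))
    listener.listen(1)
    return listener


listener = open_passive_listener("127.0.0.1")
host, port = listener.getsockname()[:2]
print(host)  # 127.0.0.1: an IP literal, not a hostname, is what gets reported
listener.close()
```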

Configuration

dCache user accounts can be configured with a personal root and home directory. How these are interpreted depends on the door and protocol used. For FTP, the account root directory forms the root of the name space, and the home directory is the initial directory of the FTP session. For other doors, a configuration property usually defines a directory to export as the root of that door. The account root directory is then used as an additional authorization check that prevents users from accessing any directory outside their root. This behavior is now configurable for the FTP door through the new ftp.root property. If left empty, the per-account root is used as before. If set to a path, that path is exported as the root of the door.
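The two modes can be compared with a small path-mapping sketch. It is hypothetical: ftp_root mirrors the ftp.root property, and special cases such as the upload directory are not modeled.

```python
import posixpath


def resolve_ftp_path(ftp_root: str, account_root: str, client_path: str) -> str:
    """Map a path seen by the FTP client to a name space path.

    ftp_root mirrors ftp.root: empty means "use the account root as the door root".
    """
    root = ftp_root or account_root
    full = posixpath.normpath(posixpath.join(root, client_path.lstrip("/")))
    # In both modes, access outside the account root is refused.
    prefix = account_root.rstrip("/") + "/"
    if full != account_root and not full.startswith(prefix):
        raise PermissionError(f"{full} is outside {account_root}")
    return full
```

With ftp.root empty, a client path of /a.txt lands under the account root. With ftp.root = /, the client must use the full path, but the account root still bounds what it may access.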

HTTP/WebDAV

Proxy mode

The webdav door allows uploads and downloads to be proxied through the door as an alternative to redirecting the client to the pool. Downloads already used HTTP between the door and the pool. Uploads used an FTP data channel, because the HTTP mover originally did not support uploads. Proxied HTTP uploads now also use HTTP between the door and the pool. The primary admin-visible change is that the TCP connection is now created from the door to the pool rather than from the pool to the door. The configuration property webdav.net.internal is now used as a hint for the pool to select an interface facing the door. The property is respected by both proxied downloads and proxied uploads.

WebDAV properties

Limited support for WebDAV properties has been implemented. WebDAV provides an extensible mechanism for reporting additional meta data about files and directories. These are known as properties and can be queried through the PROPFIND HTTP method - the same mechanism used to list WebDAV collection resources (directories in dCache). Currently only support for checksums, access latency and retention policy is provided.

An example follows.

$ curl -X PROPFIND -H Depth:0 http://localhost:2880/public/test-1390395373-1 \
    --data '<?xml version="1.0" encoding="utf-8"?>
<D:propfind xmlns:D="DAV:">
  <D:prop xmlns:R="http://www.dcache.org/2013/webdav"
          xmlns:S="http://srm.lbl.gov/StorageResourceManager">
    <R:Checksums/>
    <S:AccessLatency/>
    <S:RetentionPolicy/>
  </D:prop>
</D:propfind>'
<?xml version="1.0" encoding="utf-8" ?>
<d:multistatus xmlns:cs="http://calendarserver.org/ns/" xmlns:d="DAV:" xmlns:cal="urn:ietf:params:xml:ns:caldav" xmlns:ns1="http://srm.lbl.gov/StorageResourceManager" xmlns:ns2="http://www.dcache.org/2013/webdav" xmlns:card="urn:ietf:params:xml:ns:carddav">
  <d:response>
    <d:href>/public/test-1390395373-1</d:href>
    <d:propstat>
      <d:prop>
        <ns1:AccessLatency>ONLINE</ns1:AccessLatency>
        <ns2:Checksums>adler32=6096c965</ns2:Checksums>
        <ns1:RetentionPolicy>REPLICA</ns1:RetentionPolicy>
      </d:prop>
      <d:status>HTTP/1.1 200 OK</d:status>
    </d:propstat>
  </d:response>
</d:multistatus>
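The same query can be issued from Python with only the standard library. This is a sketch under the assumption that the door accepts the request exactly as curl sends it above. Authentication is left out, and the helper names are hypothetical. Properties are matched by namespace URI, so the server's choice of prefixes (ns1, ns2) does not matter.

```python
import urllib.request
import xml.etree.ElementTree as ET
from typing import Dict, Optional

NS = {
    "d": "DAV:",
    "dcache": "http://www.dcache.org/2013/webdav",
    "srm": "http://srm.lbl.gov/StorageResourceManager",
}

PROPFIND_BODY = """<?xml version="1.0" encoding="utf-8"?>
<D:propfind xmlns:D="DAV:">
  <D:prop xmlns:R="http://www.dcache.org/2013/webdav"
          xmlns:S="http://srm.lbl.gov/StorageResourceManager">
    <R:Checksums/><S:AccessLatency/><S:RetentionPolicy/>
  </D:prop>
</D:propfind>"""


def parse_properties(xml_bytes: bytes) -> Dict[str, Optional[str]]:
    """Extract the three dCache properties from a Depth:0 multistatus reply."""
    prop = ET.fromstring(xml_bytes).find("d:response/d:propstat/d:prop", NS)
    return {
        "checksums": prop.findtext("dcache:Checksums", namespaces=NS),
        "access_latency": prop.findtext("srm:AccessLatency", namespaces=NS),
        "retention_policy": prop.findtext("srm:RetentionPolicy", namespaces=NS),
    }


def fetch_properties(url: str) -> Dict[str, Optional[str]]:
    request = urllib.request.Request(url, data=PROPFIND_BODY.encode("utf-8"),
                                     method="PROPFIND", headers={"Depth": "0"})
    with urllib.request.urlopen(request) as response:
        return parse_properties(response.read())
```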

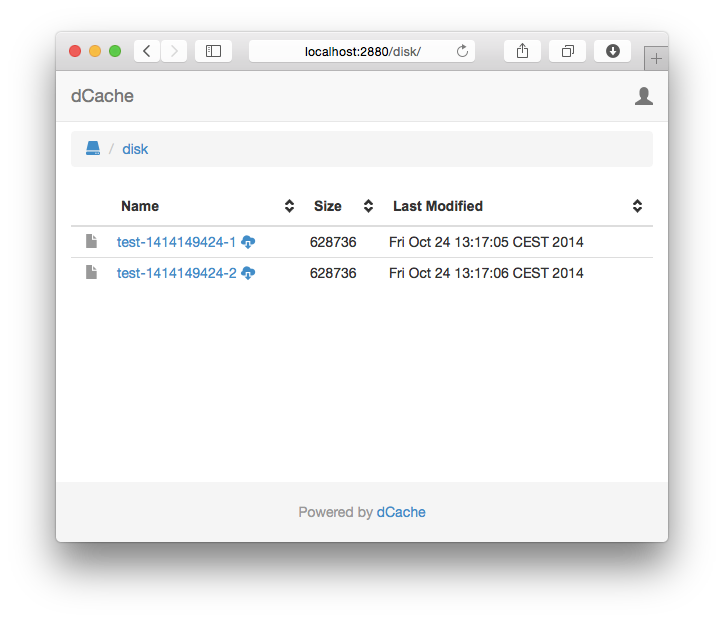

HTML styling

The default HTML rendering of directory listings and error messages has been updated to use the Bootstrap framework, which supports a rich set of features. This new default provides a deliberately toned-down presentation. The default page now contains two links for each file: one that hints the browser should show the content and the other that the browser should download the file. As before, admins may customize the rendering of directory listings and error messages. To support clients with poor or no Internet connection, all required JavaScript libraries and Bootstrap files for the default layout are included with dCache.

WLCG federated HTTP

Support for WLCG federated HTTP transfers has been added. This allows the webdav door to redirect a client to another replica if a local copy was not found. Support for this relies on special URLs generated by a central catalogue service. For all other URLs the behavior is unchanged.

Third-party transfers

dCache v2.10 sees greatly improved support for HTTP third-party transfers. These are transfers between dCache and some other HTTP server, so that the traffic does not go through the client.

In keeping with gsiftp, http third-party transfers are initiated on the pool. dCache supports both pull and push transfers. A pull transfer is where the pool fetches a file from the remote server using the HTTP GET method. A push request is where the pool uploads a file to the remote server using the HTTP PUT method.

A transfer can use either an unencrypted transport (http://...) or SSL/TLS encryption (https://...). If an SSL/TLS transport is used, the pool can use an X.509 credential when establishing the SSL/TLS connection.

Both transfer directions (push and pull) will attempt to check the integrity of the transferred data. For pull requests, all data integrity information comes from the headers supplied in the GET response. For push requests, the pool will make a subsequent HEAD request and use the supplied information to verify the data was sent correctly.

For push requests, if a PUT request is successful but the subsequent HEAD request reveals data corruption then the pool will attempt to delete the file with an HTTP DELETE request. If this DELETE fails then the error message is updated accordingly. For pull requests, no additional cleanup steps are needed.

File checksum values are discovered via the RFC 3230 extension to HTTP. Unfortunately, this extension is not widely supported by HTTP servers, so dCache supports two levels of data integrity checking: weak and strong. Weak data integrity is satisfied when:

- the remote service and the dCache server agree on the content length, and

- none of the checksums supplied by the remote server (if any) disagree with the checksums known by dCache.

Strong data integrity is satisfied when, in addition to satisfying the weak conditions:

- there is at least one checksum supplied by the remote server that agrees with a dCache-local checksum.

Weak data integrity can be satisfied by any HTTP server whereas strong data integrity requires an HTTP server with RFC 3230 support. A transfer satisfying weak integrity might not check that the checksum values match; strong integrity requires that checksums exist and match.
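The two levels can be expressed as a small classification function. This is a hypothetical sketch, not the pool's code. It assumes both sides encode each checksum the same way (for example, lowercase hex for adler32), whereas RFC 3230 specifies other encodings for some algorithms, such as base64 for MD5. It also treats a missing remote content length as a failure.

```python
from typing import Dict, Optional


def parse_digest(header: str) -> Dict[str, str]:
    """Parse an RFC 3230 'Digest' header value such as 'adler32=6096c965, md5=...'."""
    digests = {}
    for item in header.split(","):
        # partition() splits at the first '=' only, so base64 padding survives.
        algorithm, sep, value = item.strip().partition("=")
        if sep:
            digests[algorithm.lower()] = value  # algorithm names are case-insensitive
    return digests


def integrity(local_size: int, remote_size: Optional[int],
              local: Dict[str, str], remote: Dict[str, str]) -> str:
    """Classify a transfer as 'strong', 'weak' or 'failed'."""
    if remote_size is None or remote_size != local_size:
        return "failed"  # content lengths must agree
    common = local.keys() & remote.keys()
    if any(local[a] != remote[a] for a in common):
        return "failed"  # a supplied checksum disagrees
    return "strong" if common else "weak"  # strong needs at least one match
```

A transfer that requires strong verification succeeds only on "strong"; otherwise "weak" is enough.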

Third-party HTTP transfers also allow the client to specify zero or more (arbitrary) headers when requesting a file to be transferred. This allows the client to customize the HTTP request the pool sends to the remote server. Such headers could include authorization information or some other information to steer how the remote server handles the HTTP request.

Third-party transfers may be initiated by the webdav service and the srm service. See the following sections on those services for details on how to trigger third-party copying.

HTTP third-party transfers using WebDAV COPY

The webdav door supports requesting third-party file transfers. This is an extension of the WebDAV COPY method, which is normally limited to internal copies. A client may request that a file be transferred to a remote site by specifying the remote location in the Destination HTTP request header. Currently supported transports are GridFTP (destination URI starts with gsiftp://) and HTTP. For HTTP, both plain (http://) and SSL/TLS (https://) transports are supported.

The client may use the Credential HTTP request header to state whether the pool should use a delegated credential when transferring the file. The accepted values are none and gridsite. With none, no credential is used; with gridsite, a delegated credential is used. If the Credential header isn’t specified, a transport-specific default applies: gridsite for gsiftp and https transports, and none for the http transport. Some combinations are not supported: gsiftp with none, and http with gridsite.

If a delegated credential is to be used, the client must delegate a credential to dCache using the delegation service, which is part of the srm service. If no usable certificate can be found, the webdav door redirects the client to itself and includes the X-Delegate-To HTTP header in its response. This header contains a space-separated list of delegation endpoints.

For HTTP and HTTPS transfers, the client may choose whether to require weak or strong verification (see above for the definitions). The RequireChecksumVerification header controls this behavior. If the header has the value true, strong verification is required; if false, weak verification is sufficient. If the header is not specified, a configurable default value is used.

Again for HTTP and HTTPS transfers, the client may specify additional or replacement HTTP headers for the pool to use when making the transfer. These are given as headers that start with TransferHeader. The prefix is removed, and the pool uses the remaining header. For example, to have the pool use basic authentication with userid ‘Aladdin’ and password ‘open sesame’, the client would add the following header to its request:

TransferHeaderAuthorization: Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==
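The headers described in this section can be assembled programmatically. This is a hypothetical helper, not a dCache client library. It only builds the header dictionary and rejects the unsupported Credential combinations listed above. Actually sending the COPY request also needs an X.509 client credential (for example curl -E, as in the session below), which is outside this sketch.

```python
import base64
from typing import Dict, Optional
from urllib.parse import urlsplit


def basic_auth(user: str, password: str) -> str:
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def copy_headers(destination: str, credential: Optional[str] = None,
                 require_checksum: Optional[bool] = None,
                 transfer_headers: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Build request headers for a third-party WebDAV COPY to a remote URL."""
    scheme = urlsplit(destination).scheme
    if credential is not None:
        if credential not in ("none", "gridsite"):
            raise ValueError("Credential must be 'none' or 'gridsite'")
        if (scheme, credential) in (("gsiftp", "none"), ("http", "gridsite")):
            raise ValueError(f"{scheme} with Credential {credential} is not supported")
    headers = {"Destination": destination}
    if credential is not None:
        headers["Credential"] = credential
    if require_checksum is not None:
        # true: strong verification required; false: weak verification suffices.
        headers["RequireChecksumVerification"] = "true" if require_checksum else "false"
    for name, value in (transfer_headers or {}).items():
        # The TransferHeader prefix is removed and the pool sends "name: value".
        headers["TransferHeader" + name] = value
    return headers
```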

Once the transfer is accepted, the client will receive periodic progress information. If the client closes the TCP connection, the transfer is canceled.

> curl -L -E "Gerd Behrmann" -k -X COPY -H Destination:https://localhost:2881/disk/foobar https://127.0.0.1:2881/disk/test-1414149424-1

<html>

<head>

<meta http-equiv="Content-Type" content="text/html;charset=ISO-8859-1"/>

<title>Error 401 </title>

</head>

<body>

<h2>HTTP ERROR: 401</h2>

<p>Problem accessing /disk/test-1414149424-1. Reason:

<pre> client failed to delegate a credential</pre></p>

<hr /><i><small>Powered by Jetty://</small></i>

</body>

</html>

> delegation

Type 'help' for help on commands.

Type 'exit' or Ctrl+D to exit.

$ endpoint srm://localhost

[srm://localhost] $ delegate

Generated certificate expire Fri Oct 24 22:10:37 CEST 2014

Delegated credential has id 6BBBD539A5D5206861EEB1C0D9123C9FDA94E32A

> curl -L -E "Gerd Behrmann" -k -X COPY -H Destination:https://localhost:2882/disk/foobar https://127.0.0.1:2882/disk/test-1414149424-1

Perf Marker

Timestamp: 1414153228

State: 1

State description: querying file metadata

Stripe Index: 0

Total Stripe Count: 1

End

success: Created

HTTP third-party transfers using SRM

The srm service supports http and https transfers in both pull operations (the dCache pool makes an HTTP GET request) and push operations (the pool makes an HTTP PUT request). The SRM client can steer the transfer by supplying ExtraInfo arguments: a set of arbitrary key-value pairs that the client can specify when making the request. The currently supported ExtraInfo keys are:

- verified: If set, takes a value of either true or false. This controls whether weak or strong verification is required. If set to false, weakly verified transfers are successful. If set to true, a transfer must be strongly verified to be successful.

- header-: All ExtraInfo elements that start with header- are converted into HTTP headers. The HTTP header key is the ExtraInfo key without the initial header-, and the HTTP header value is the ExtraInfo value. These HTTP headers are used when the pool makes a request.
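The mapping from ExtraInfo to transfer settings can be sketched as follows. The function is hypothetical. None stands for "verified not set", and what dCache then does is not covered here. Any value other than true (case-insensitive) is treated as false in this sketch.

```python
from typing import Dict, Optional, Tuple


def split_extra_info(extra_info: Dict[str, str]) -> Tuple[Optional[bool], Dict[str, str]]:
    """Return (require_strong, http_headers) from SRM ExtraInfo key/value pairs."""
    verified = extra_info.get("verified")
    require_strong = None if verified is None else verified.lower() == "true"
    # Keys starting with "header-" become HTTP headers without that prefix.
    headers = {key[len("header-"):]: value
               for key, value in extra_info.items()
               if key.startswith("header-")}
    return require_strong, headers
```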

If the SRM client delegates a credential as part of the GSI connection, this credential is used to authenticate when the pool makes an SSL/TLS-based connection to the remote server. If the client doesn’t delegate then dCache will attempt the transfer without any client credential.

$ srmcp -delegate srm://127.0.0.1/disk/test-1414149424-1 https://localhost:2882/disk/foobar2

$ srmls srm://localhost/disk/foobar2

628736 /disk/foobar2

SRM

The srm service has been heavily refactored, providing improved persistence, standards compliance, logging, and robustness.

Persistence

Persistence of SRM requests to the database has been improved. As a result, SRM database throughput should be much higher than in earlier versions. Loading requests back from the database during startup is faster, and the risk of database queue overflows should be reduced. The gain is especially noticeable when request history persistence is enabled. We recommend enabling request history persistence, as it makes transfer issues easier to debug.

Bring-online requests, as well as get and put requests for which a transfer URL (TURL) was already prepared, now survive an srm restart.

Absolute FTP TURLs

The SRM protocol allows the client to negotiate a transfer URL (TURL) for uploading or downloading a file. URLs for FTP transfers are defined in RFC 1738. According to the standard, they are to be interpreted relative to the user’s login directory (home directory) and not relative to the root directory.

The srm service in previous versions of dCache generated TURLs relative to the root directory. This does not work as expected for RFC 1738-compliant clients if the user has a home directory other than /.

This problem has now been fixed, and the returned TURL works for users with a non-root home directory. Unfortunately, neither JGlobus nor Globus interprets RFC 1738 correctly. As a workaround, we now generate TURLs with an extra leading / in the path. This gives the correct behavior with the FTP clients typically used for SRM, but would fail with a strictly standards-compliant client.
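The following simplified model of a strictly RFC 1738-compliant client shows why the extra leading / is only a workaround. Path segments are interpreted relative to the login directory, and %2F is the standard way to express an absolute path. An empty segment (as produced by //) would become a CWD with an empty argument, whose outcome depends on the server, so the model refuses it. The function name is hypothetical.

```python
import posixpath
from urllib.parse import unquote, urlsplit


def rfc1738_target(login_dir: str, url: str) -> str:
    """Resolve an FTP URL path strictly per RFC 1738, relative to the login directory.

    Every segment except the last is a CWD argument; the last one is the file name.
    """
    path = urlsplit(url).path
    current = login_dir
    for segment in (unquote(s) for s in path[1:].split("/")):
        if segment == "":
            # "CWD" with an empty argument: server-dependent, so refuse it here.
            raise ValueError("empty path segment in FTP URL")
        # An absolute segment ("/data", from %2Fdata) restarts at the root.
        current = posixpath.join(current, segment)
    return posixpath.normpath(current)
```

For a user whose home is /home/alice, gsiftp://door/data/f resolves to /home/alice/data/f under this model. A dCache-style //data/f is rejected, while %2Fdata/f reaches /data/f.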

Protocol negotiation

When negotiating a TURL, the srm service must choose from a list of protocols provided by the client. dCache uses the list of doors registered with a loginbroker to determine the supported protocols.

loginbroker now supports registering the root directory of doors. As a consequence, srm no longer needs to be configured with the root path of each door, and the properties srm.service.webdav.root and srm.service.xrootd.root are obsolete.

If doors are configured to use different root directories, not all doors may be able to serve a file. This is now taken into account when choosing the transfer protocol and door to use.
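The effect of registered door roots on protocol selection can be sketched as follows. This is a hypothetical model. The Door fields, the assumption that the client's list is in order of preference, and the TURL layout are all illustrative, and the extra leading / used for FTP TURLs is ignored.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Door:
    protocol: str  # e.g. "gsiftp" or "https", as registered with the loginbroker
    host: str
    port: int
    root: str      # root directory the door exports


def select_turl(client_protocols: List[str], doors: List[Door], path: str) -> Optional[str]:
    """Pick the first client protocol that has a door able to serve the path."""
    for protocol in client_protocols:
        for door in doors:
            root = door.root.rstrip("/")
            # A door can only serve files below its own root directory.
            if door.protocol == protocol and path.startswith(root + "/"):
                return f"{protocol}://{door.host}:{door.port}{path[len(root):]}"
    return None
```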

Unique write TURLs

In earlier versions, the dCache srm door acted as a redirector for regular dCache doors. This means that TURLs generated by the srm service were the same URLs you would have used to access those files without SRM.

This has changed: for uploads, each TURL is now unique. The uploaded file remains invisible to users of non-SRM protocols until the SRM client commits the upload by calling srmPutDone.

SRM upload requests have a finite lifetime. If the SRM client does not call srmPutDone in time, the request times out. Should this happen, any file uploaded using the corresponding TURL is deleted, and any subsequent attempt to upload to the TURL fails.

There are many reasons for making this change. To name a few:

- The expected file size, access latency and retention policy provided by the SRM client could not be supported without enabling the spacemanager service. These parameters are now respected even without space management.

- Failure recovery was difficult. dCache could never be certain whether a file uploaded through a door was part of the SRM upload, or had been written by a non-SRM client that happened to upload to the same path at the same time.

- If an SRM upload was aborted and retried, we could not prevent the first client from completing the second upload as we always generated the same TURL. Thus we couldn’t properly isolate the two uploads from each other.

- Since the only way to bind file size, space token, access latency and retention policy to a file before upload was through the spacemanager service, space reservations were bound to paths. Such reservations would prevent the srm from creating concurrent uploads to the same SURL, but to work correctly, the two services had to agree on the state. Wherever there is distributed state, there is a risk that the state is inconsistent. This problem was at the heart of the “Already have 1 record(s)” errors.

To generate unique write TURLs, the srm creates a unique upload directory for each upload. After the transfer completes, the file is moved to the target path and the upload directory is deleted. Parameters like expected file size, access latency, retention policy and space token are bound to the directory using Chimera directory tags.
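The lifecycle of an upload directory can be modeled on a local file system. This is a sketch under stated assumptions, not the srm implementation. The helper names and the 0o700 permissions are hypothetical, and Chimera directory tags are not modeled.

```python
import os
import shutil
import uuid


def start_upload(upload_base: str) -> str:
    """Create a unique upload directory; the write TURL points inside it."""
    upload_dir = os.path.join(upload_base, uuid.uuid4().hex)
    os.makedirs(upload_dir, mode=0o700)  # hypothetical permissions
    return upload_dir


def put_done(upload_dir: str, name: str, target: str) -> None:
    """Commit: move the file to its final path, then remove the upload directory."""
    os.replace(os.path.join(upload_dir, name), target)
    os.rmdir(upload_dir)


def abort_upload(upload_dir: str) -> None:
    """Timeout or abort: delete whatever was uploaded through this TURL."""
    shutil.rmtree(upload_dir, ignore_errors=True)
```

Because each attempt gets its own directory, a retried upload cannot be completed by a stale client holding the first TURL.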

In rare cases, typically if dCache components are restarted or get disconnected, upload directories may be orphaned. Currently, the administrator has to manually delete such directories.

By default, these temporary upload directories are created under the /upload directory. Chimera automatically creates this directory the first time it is needed. Ownership and permissions are set such that nobody can list the directory, and thus nobody can discover the temporary upload directories.

IMPORTANT If the transfer doors used for srm do not export the /upload directory, the upload will fail. The following sections describe several solutions to this problem, in order of preference.

Define an explicit FTP root

By default, FTP doors only expose the root directory of the account of the user. If accounts have different root directories, one cannot define a common directory visible through FTP for all users. One solution is to change the behavior of the FTP doors to expose a fixed directory as their root. The FTP door would still only allow access to files under the root directory of the account, or within the upload directory.

[domain]
[domain/pnfsmanager]
[domain/ftp]
ftp.authn.protocol = gsi
ftp.root = /
[domain/srm]

Define a different upload directory

In case the doors already expose a common root directory that just happens to be different from /, the solution is to set the dcache.upload-directory property to a path that is exported by all doors. A typical site value would be /pnfs/example.org/data/upload. This property has to be set for the pnfsmanager and all doors used for SRM transfers.

dcache.upload-directory = /pnfs/example.org/data/upload

[domain]
[domain/pnfsmanager]
[domain/ftp]
ftp.authn.protocol = gsi
ftp.root = /pnfs/example.org/data
[domain/webdav]
webdav.authn.protocol = https-jglobus
webdav.root = /pnfs/example.org/data
[domain/srm]
srm.root = /pnfs/example.org/data

Define extra doors for SRM to use

If you cannot find a directory visible through all doors used by the srm, you can introduce special door instances used only for SRM transfers. Such doors can be configured to export only the upload base directory. This approach works both when different root paths are defined per door and when they are defined per user.

[domain]
[domain/pnfsmanager]
[domain/ftp]
ftp.authn.protocol = gsi
ftp.root = /pnfs/example.org/data/foo
[domain/ftp]
ftp.cell.name = FTP-SRM-${host.name}
ftp.net.port = 2812
ftp.authn.protocol = gsi
ftp.root = ${dcache.upload-directory}
[domain/srm]

This duplication is only necessary for protocols that do not expose the upload directory already (typically FTP).

Define per user upload directories

The final solution is to specify a relative upload path, in which case it is interpreted relative to the account's root directory. Assuming that the users' root directories are exposed through all doors, these relative directories will all be exposed too.

dcache.upload-directory = upload

[domain]
[domain/pnfsmanager]
[domain/ftp]
ftp.authn.protocol = gsi
[domain/webdav]
webdav.authn.protocol = https-jglobus
[domain/srm]

An account with root directory /pnfs/example.org/data/foobar would then use the upload directory /pnfs/example.org/data/foobar/upload. The downsides of this approach are:

- the upload directories may clash with existing directories;

- the user may alter the permissions of the upload directory, thus bypassing the protection that unique TURLs were supposed to introduce; and

- the upload directories are created in many different places, making it more difficult to clean orphaned upload directories.
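The rule for absolute versus relative values of dcache.upload-directory can be made concrete with a small Python sketch. The function resolve_upload_directory is hypothetical and only mirrors the behaviour described in this section; it is not how dCache implements the lookup internally.

```python
import posixpath


def resolve_upload_directory(upload_directory: str, account_root: str) -> str:
    """Hypothetical sketch: where uploads for one account would be staged.

    An absolute dcache.upload-directory value is used as-is; a relative value
    is interpreted relative to the account's root directory.
    """
    if posixpath.isabs(upload_directory):
        return posixpath.normpath(upload_directory)
    return posixpath.normpath(posixpath.join(account_root, upload_directory))
```

With the relative value upload and the account root /pnfs/example.org/data/foobar, this yields /pnfs/example.org/data/foobar/upload, matching the example above; an absolute value such as /pnfs/example.org/data/upload is shared by all accounts.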

Scheduling

dCache v2.10 sees a major overhaul in how the srm controls and limits its interaction with other dCache services. The following sections describe these changes.

Background

The SRM protocol has the concept of a client’s request not being processed immediately, with the request being queued prior to any work being conducted. This is most apparent for asynchronous requests, where the client reconnects periodically to discover what progress, if any, has taken place. The following also applies to synchronous requests (where the client waits to learn the result of the query); however, in that case the client is unaware of these details.

In general, a successful request will start out in a queued state. After dCache starts processing the request, the request will have an in-progress state. For transfer requests (GET or PUT), the request will be in a ready state when there is a TURL for the client to use, and in a success state after a successful transfer. For non-transfer requests (LS, BRING-ONLINE, …) the request will go into the success state once dCache finishes processing it, skipping the ready state.
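The sequence of states a successful request passes through can be sketched in Python. The State enum and happy_path function are illustrative names, not dCache classes, and failure, abort and retry transitions are deliberately left out.

```python
from enum import Enum


class State(Enum):
    QUEUED = "queued"
    IN_PROGRESS = "in-progress"
    READY = "ready"
    SUCCESS = "success"


# Per the text above, only GET and PUT are transfer requests.
TRANSFER_TYPES = {"GET", "PUT"}


def happy_path(request_type: str) -> list[State]:
    """States of a successful request (simplified sketch, no failures)."""
    states = [State.QUEUED, State.IN_PROGRESS]
    if request_type in TRANSFER_TYPES:
        # Transfer requests sit in READY while the client uses the TURL.
        states.append(State.READY)
    # Non-transfer requests (LS, BRING-ONLINE, ...) skip READY.
    states.append(State.SUCCESS)
    return states
```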

To answer most client-issued requests, the srm service must trigger some activity in other dCache services. For the most part, the srm service simply issues requests to other services, collects the responses and converts them into a corresponding SRM reply.

The srm service has several execution pools to throttle the processing of requests. These limits are applied to individual files: for example, a single request to read 10 files is scheduled as if there were 10 requests, each reading a single file.

Prior to dCache v2.10, the srm throttled itself by limiting the number of requests it could process concurrently. Once this limit was reached, additional requests were placed in a queue with a limited capacity. Once this queue limit was reached, subsequent requests were rejected. The effect was to throttle the rate at which messages were sent to other dCache services.

In practice, a single thread could easily saturate the rest of dCache, which meant that this was not a particularly effective way to throttle the srm service.

Furthermore, the queue limits also affected requests that had already begun processing. Thus, under high load, requests could be observed failing even though they had already started processing. At least in theory, this could lead to starvation, in which a lot of processing takes place while no request ever succeeds.

New approach

Rather than rate-limiting the delivery of internal messages, dCache v2.10 provides the ability to limit the number of requests in each of the different states mentioned above (“queued”, “in-progress” and “ready”). These limits take “in-flight” requests into account.

There are now three types of limits: max-requests, max-inprogress and max-transfers.

- max-requests

  Controls the total number of requests that are queued or being actively worked on. Once this limit is reached, any further requests from SRM clients are rejected. The limit allows the srm service to protect against running out of memory. Setting the value too low will result in the srm not fully utilizing the available memory; setting it too high will risk running out of memory under heavy load.

- max-inprogress

  Controls the concurrent activity within dCache; once this limit is reached, subsequent requests are either queued or rejected, depending on the max-requests limit. This allows the srm service to avoid overloading core dCache services when processing SRM requests. The max-inprogress limit also controls the maximum number of concurrent staging requests for GET and BRING-ONLINE requests. For COPY requests, it sets the maximum number of concurrent transfers.

  Setting the value too low will result in the srm artificially limiting its performance, as requests will needlessly spend time queued. Setting the value too high will risk overloading core components when the srm service is under heavy load.

- max-transfers

  Controls the number of TURLs handed out to clients. Once this limit is reached, the srm keeps subsequent requests that have a TURL in the in-progress state, at least from the point of view of the client. Internally, such requests are in the ready-queued state and no longer count towards the max-inprogress limit. As SRM clients complete their transfers, requests that have a TURL but are in the ready-queued state can become ready. This limit mainly allows dCache to protect pools by limiting the number of concurrent transfers. It also provides some protection for the transfer doors (typically ftp) and the poolmanager.

  Since this limit type affects only transfers, setting the value too low will result in artificially poor transfer rates (with client requests spending a large amount of time in the in-progress state despite having a TURL) while other SRM requests are processed quickly. Setting the value too high risks overloading pools when dCache is under heavy load.
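How the three limits interact for a single request type can be modelled with a small Python sketch. Scheduler and Limits are hypothetical names, and the model is a simplification: it assumes that requests whose TURL has been handed out (ready) no longer count towards max-requests, and it ignores failures, retries and the asynchronous nature of the real srm.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    max_requests: int
    max_inprogress: int
    max_transfers: int


@dataclass
class Scheduler:
    """Toy model of the three limits for one request type (not dCache code)."""
    limits: Limits
    queued: int = 0
    in_progress: int = 0
    ready_queued: int = 0  # has a TURL, but the client still sees in-progress
    ready: int = 0         # TURL handed out to the client

    def submit(self) -> bool:
        # max-requests: reject once queued plus active requests hit the limit
        # (assumption: READY requests are not counted here).
        if self.queued + self.in_progress + self.ready_queued >= self.limits.max_requests:
            return False
        self.queued += 1
        self._dispatch()
        return True

    def turl_available(self) -> None:
        # Internal work for one request finished and it now has a TURL.
        self.in_progress -= 1
        self.ready_queued += 1
        self._dispatch()

    def transfer_done(self) -> None:
        # The client finished using one TURL.
        self.ready -= 1
        self._dispatch()

    def _dispatch(self) -> None:
        # max-transfers: promote TURL holders to READY while slots remain.
        while self.ready_queued and self.ready < self.limits.max_transfers:
            self.ready_queued -= 1
            self.ready += 1
        # max-inprogress: start queued work while slots remain; ready-queued
        # requests no longer count towards this limit.
        while self.queued and self.in_progress < self.limits.max_inprogress:
            self.queued -= 1
            self.in_progress += 1
```

For example, with max_requests=3, max_inprogress=1 and max_transfers=1, a fourth submission is rejected while two requests are still queued, and a second request that obtains a TURL stays ready-queued until the first transfer completes.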

There are request-specific properties that may be configured for each of these three types of limit. These have names like srm.request.TYPE.{max-requests,max-inprogress,max-transfers}, where TYPE is one of get, put, ls, bring-online, copy or reserve-space.

For example:

- srm.request.bring-online.max-inprogress controls the maximum number of concurrent staging requests.

- srm.request.get.max-transfers controls the maximum number of concurrent downloads (the maximum number of TURLs handed out at any time).

- srm.request.ls.max-requests controls how many file metadata and directory listing queries to allow (either actively being processed or queued) before rejecting new requests.
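When auditing a configuration for per-type overrides, it can help to list every property name implied by this naming scheme. The Python sketch below does that; the list of types is copied from the text, and it assumes, based on the later paragraph on max-transfers defaults, that only get and put have a max-transfers property. The function name is hypothetical.

```python
REQUEST_TYPES = ["get", "put", "ls", "bring-online", "copy", "reserve-space"]
# Assumption: only these two types have a max-transfers property.
MAX_TRANSFERS_TYPES = ["get", "put"]


def limit_property_names() -> list[str]:
    """All srm.request.TYPE.LIMIT property names under the scheme above."""
    names = []
    for request_type in REQUEST_TYPES:
        for limit in ("max-requests", "max-inprogress"):
            names.append(f"srm.request.{request_type}.{limit}")
    for request_type in MAX_TRANSFERS_TYPES:
        names.append(f"srm.request.{request_type}.max-transfers")
    return names
```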

The max-requests limit properties (srm.request.get.max-requests, srm.request.put.max-requests, etc.) all take a common default value controlled by the srm.request.max-requests property. The current default value for srm.request.max-requests is 10,000. Note that the srm.request.max-requests value applies to each SRM request type (GET, PUT, BRING-ONLINE, …) independently. Therefore, the overall maximum number of requests is six times the configured value (60,000 with the default).
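The fallback from a per-type property to srm.request.max-requests, and the resulting overall bound, can be sketched in Python. The props dictionary stands in for a site's configuration and the helper names are hypothetical; dCache's own configuration system performs the real property resolution.

```python
REQUEST_TYPES = ["get", "put", "ls", "bring-online", "copy", "reserve-space"]


def effective_max_requests(props: dict[str, int], request_type: str) -> int:
    """Per-type value if set, otherwise the common srm.request.max-requests."""
    common = props.get("srm.request.max-requests", 10_000)  # documented default
    return props.get(f"srm.request.{request_type}.max-requests", common)


def overall_max_requests(props: dict[str, int]) -> int:
    # Each request type is limited independently, so the overall bound
    # is the sum over all six types.
    return sum(effective_max_requests(props, t) for t in REQUEST_TYPES)
```

With no overrides this gives 6 × 10,000 = 60,000; lowering only srm.request.ls.max-requests to 500 gives 50,500.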

The max-inprogress properties (srm.request.get.max-inprogress, srm.request.put.max-inprogress, etc.) all take individual default values. The current default values allow concurrent processing of 10,000 BRING-ONLINE requests, 1,000 GET and COPY requests, 50 PUT requests, 50 LS requests, and 10 RESERVE-SPACE requests.

The two max-transfers properties (srm.request.get.max-transfers and srm.request.put.max-transfers) take pre-2.10 srm configuration properties as default values.

As with prior versions of dCache, if a request suffers a transitory failure, the srm will retry the operation after waiting a while. Prior to 2.10, such retries were treated specially. With 2.10, a retry is treated as if it were a fresh request and is thus subject to the three limits described above.

Monitoring

Info

The info service, which collects and collates information from various dCache components, now supports presenting the gathered data in different formats. Previous versions of dCache only supported a dCache-specific XML format. With dCache v2.10, two additional formats are available.

There are two ways the client can choose the format. The HTTP client can specify the MIME type in the Accept request header; JSON-specific clients may do this automatically. The alternative is to append ?format=FORMAT to the URI. There are four supported MIME types (application/xml, text/xml, application/json and text/x-ascii-art) and three supported values for ?format= (xml, json and pretty). The default format continues to be XML.
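Both ways of selecting a format can be expressed with Python's standard urllib module. The sketch below only builds the requests; the URL mirrors the curl example in this section (host, port and domain name will differ per site), and the helper names are hypothetical.

```python
import urllib.parse
import urllib.request

INFO_URL = "http://127.0.0.1:2288/info/domains/dCacheDomain/static"


def by_accept_header(url: str, mime_type: str) -> urllib.request.Request:
    # Option 1: request the format via the Accept header, e.g.
    # application/json or text/x-ascii-art.
    return urllib.request.Request(url, headers={"Accept": mime_type})


def by_query_parameter(url: str, fmt: str) -> urllib.request.Request:
    # Option 2: append ?format=... with xml, json or pretty.
    query = urllib.parse.urlencode({"format": fmt})
    return urllib.request.Request(f"{url}?{query}")
```

Passing either request to urllib.request.urlopen() performs the actual HTTP GET against the info service.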

Additional attributes have been added to the domain/<domain-name>/static node, including information on the OS and the JVM.

$ curl -H Accept:application/json http://127.0.0.1:2288/info/domains/dCacheDomain/static

{
  "java": {
    "vendor": "Oracle Corporation",
    "vm": {
      "vendor": "Oracle Corporation",
      "name": "Java HotSpot(TM) 64-Bit Server VM",
      "version": "25.20-b23",
      "specification-version": "1.8",
      "info": "mixed mode"
    },
    "class-version": "52.0",
    "runtime-version": "1.8.0_20-b26",
    "version": "1.8.0_20",
    "specification-version": "1.8"
  },
  "os": {
    "name": "Mac OS X",
    "arch": "x86_64",
    "version": "10.10"
  },
  "user": {
    "country": "US",
    "timezone": "Europe/Copenhagen",
    "name": "behrmann",
    "language": "en"
  }
}
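A client consuming the JSON form can pick out individual fields with the standard json module. The sketch below assumes the field names shown in the sample output above; other dCache versions or sites may expose different attributes, and the function name is hypothetical.

```python
import json


def summarize_static(doc: str) -> str:
    """Summarize the JVM and OS fields of a domain's static info node."""
    data = json.loads(doc)
    java = data["java"]
    os_info = data["os"]
    return f'{java["version"]} on {os_info["name"]} {os_info["version"]} ({os_info["arch"]})'
```

Applied to the output above, this returns "1.8.0_20 on Mac OS X 10.10 (x86_64)".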

JMX