for 1.9.12-series (FHS layout)

Abstract

The dCache Book is the guide for administrators of dCache systems. The first part describes the installation of a simple single-host dCache instance. The second part describes the components of dCache and in what ways they can be configured. This is the place for finding information about the role and functionality of components in dCache as needed by an administrator. The third part contains solutions for several problems and tasks which might occur during operating of a dCache system. Finally, the last two parts contain a glossary and a parameter and command reference.

Each release of dCache is available in two file layouts: FHS and opt. The FHS layout has files located according to the Filesystem Hierarchy Standard. Most modern distributions have adopted the FHS, so we recommend that fresh installations of dCache used this layout.

The opt layout places all the files in /opt/d-cache directory. This is

where previous versions of dCache located all the packaged

files. The opt layout is provided for backwards

compatibility and to satisfy those sites that cannot use the

FHS layout.

Table of Contents

- Preface

- I. Getting started

- II. Configuration of dCache

- 4. Chimera

- 5.

pnfs - 6. The Cell Package

- 7. The

replicaService (Replica Manager) - 8. The

poolmanagerService - 9. The dCache Tertiary Storage System Interface

- 10. File Hopping

- 11. dCache Partitioning

- 12. Authorization in dCache

- 13. dCache as xRootd-Server

- 14. dCache Storage Resource Manager

- 15. The

statisticsService - 16. dCache Webadmin-Interface

- 17. ACLs in dCache

- 18. GLUE Info Provider

- 19. Stage Protection

- III. Cookbook

- IV. Reference

List of Figures

- 1.1. The dCache Layer Model

- 7.1. Pool State Diagram

List of Tables

- 22.1. Protocol Overview

- 23.1. Protocol Overview

- 25.1. Open Timeout mechanisms

- 26.1. Property Overview

- 26.2. Property Overview

- 26.3. Property Overview

- 29.1.

List of Examples

- 17.1. ACL allowing specific user to delete files in a directory

- 17.2. ACL to deny a group

- 17.3. ACL to allow a user to delete all files and subdirectories

- 17.4. Obtain ACL information by absolute path

- 20.1. surveying the space tokens available in a directory.

- 20.2. Listing the space tokens for a

SRM: - 20.3. Using

srmls -l: - 20.4. Using

srmls -l: - 20.5. Limited directory listing

- 23.1. Batch file for two

GridFTPdoors serving separate network interfaces - 23.2. Batch file for two

GridFTPdoors serving separate network interfaces

Table of Contents

Welcome to the dCache. dCache is a distributed storage solution for storing huge amounts of data without a hard limit, used to provide storage in the petabyte range. Therefore it qualifies as the storage system supporting data intensive experiments.

dCache is a joined effort between the Deutsches Elektronen-Synchrotron (DESY) in Hamburg, Nordic Data Grid Facility (NDGF based in Copenhagen), the Fermi National Accelerator Laboratory near Chicago with significant distributions and support from the University of California, San Diego, INFN, Bari as well as Rutherford Appleton Laboratory, UK and CERN in Geneva.

dCache can use hierarchical storage management (e.g., hard disk and tape), provides mechanisms to automatically increase performance and balance loads, increase resilience and availability. It also supplies advanced control systems to manage data as well as data flows. Normal filesystem (btrfs, ext4, XFS, ZFS) is used to store data on storage nodes. There are several ways of accessing data stored in dCache:

NFS4.1 (Chimera)HTTPandWebDAVGridFTP(GSI-FTP)xrootd

SRM(versions 1.1 and 2.2)dCapandGSIdCap

dCache supports certificate based authentication through the Grid Security Infrastructure

used in GSI-FTP, GSIdCap transfer protocols and the SRM management protocol.

Certificate authentication is also available for HTTP and WebDAV.

dCache also supports fine-grain authorization with support for POSIX file permissions

and NFS-style access control lists.

Other features of dCache are:

Resilience and high availability can be implemented in different ways by having multiple replicas of the same files.

Easy migration of data via the migration module.

A powerful cost calculation system that allows to control the data flow (reading and writing from/to pools, between pools and also between pools and tape).

Load balancing and performance tuning by hot pool replication (via cost calculation and replicas created by pool-to-pool-transfers).

Space management and support for space tokens.

Garbage collection of replicas, depending on their flags, age, et cetera.

Detailed logging and debugging as well as accounting and statistics.

XML information provider with detailed live information about the cluster.

Scriptable adminstration interface with a terminal-based front-end.

Web-interface with live information of the most important information.

Ensuring data integrity through checksumming.

dCache / SRM can transparently manage data distributed among

dozens of disk storage nodes (sometimes distributed over several countries).

The system has shown to significantly improve the efficiency of connected tape storage

systems, by caching, gather and flush and scheduled staging techniques.

Furthermore, it optimizes the throughput to and from data clients by dynamically

replicating datasets on the detection of load hot spots. The system

is tolerant against failures of its data servers, which allows administrators

to deploy commodity disk storage components.

Access to the data is provided by various standard protocols. Furthermore

the software comes with an implementation of the Storage Resource Manager

protocol (SRM), which is an open standard for grid middleware

to communicate with site specific storage fabrics.

This book is primerally targeted at system administrators.

For minimal test installation:

Hardware: contemporary CPU , 1 GiB of RAM , 100 MiB free harddisk space

Software: Oracle/Sun Java, Postgres SQL Server

For a high performance Grid scenario the hardware requirements highly differ, which

makes it impossible to provide such parameters here. However, if you wish to setup a

dCache-based storage system, just let us know and we will help you with your system

specifications. Just contact us: <support@dcache.org>.

This book shall introduce you to dCache and provide you with the details of the installation. It describes configuration, customization of dCache as well as the usage of several protocols that dCache supports. Additionally, it provides cookbooks for standard tasks.

Here is an overview part by part:

Part 1, Getting started: This part introduces you to the cells and domain concept in dCache. It provides a detailed description of installing, the basic configuration, and upgrading dCache.

Part 2, Configuration of dCache: Within this part the configuration of several additional features of dCache is described. They are not necessary to run dCache but will be needed by some users depending on their requirements.

Part 3, Cookbook: This part comprises guides for specific tasks a system administrator might want to perform.

This part gets you all the help that you might need:

For acquiring resources:

The download page.

The YUM repositories.

For getting help during installation:

Developers

<support@dcache.org>Additional Support:

German support:

<german-support@dcache.org>UK support:

<GRIDPP-STORAGE@JISCMAIL.AC.UK>USA support:

<osg-storage@opensciencegrid.org>User Forum:

<user-forum@dcache.org>

For features that you would like to see in dCache or bugs that should be fixed: Just write an e-mail to

<support@dcache.org>If you like to stay up-to-date about new releases you can use the RSS feeds available from our downloads page.

For EMI releases of dCache please visit the EMI dCache download page.

This part is intended for people who are new to dCache. It gives an introduction to dCache, including how to configure a simple setup, and details some simple and routine administrative operations.

Table of Contents

dCache is a distributed storage solution. It organises storage across computers so the combined storage can be used without the end-users being aware of where their data is stored. They simply see a large amount of storage.

Because end-users do not need to know on which computer their data is stored, it can be migrated from one computer to another without any interruption of service. As a consequence, (new) servers may be added to or taken away from the dCache storage cluster at any time.

dCache supports requesting data from a tertiary storage system. Such systems typically store data on magnetic tapes instead of disks, which must be loaded and unloaded using a tape robot. The main reason for using tertiary storage is the better cost-efficiency, archiving a very large amount of data on rather inexpensive hardware. In turn the access latency for archived data is significantly higher.

dCache also supports many transfer protocols (allowing users to read and write to data). These have a modular deployment, allowing dCache to support expanded capacity by providing additional front-end machines.

Another performance feature of dCache is hot-spot data migration. In this process, dCache will detect when files are requested very often. If this happens, dCache can generate duplicates of the popular files on other computers. This allows the load to be spread across multiple machines, so increasing throughput.

The flow of data within dCache can also be carefully controlled. This is especially important for large sites as chaotic movement of data may lead to suboptimal usage. Instead, incoming and outgoing data can be marshaled so they use designated resources guaranteeing better throughput and improving end-user experience.

dCache provides a comprehensive administrative interface for configuring the dCache instance. This is described in the later sections of this book.

dCache, as distributed storage software, can provide a coherent service using multiple computers or nodes (the two terms are used interchangeable). Although dCache can provide a complete storage solution on a single computer, one of its strengths is the ability to scale by spreading the work over multiple nodes.

A cell is dCache’s most fundamental executable building block. Even a small dCache deployment will have many cells running. Each cell has a specific task to perform and most will interact with other cells to achieve it.

Cells can be grouped into common types; for example, pools, doors. Cells of the same type behave in a similar fashion and have higher-level behaviour (such as storing files, making files available). Later chapters will describe these different cell types and how they interact in more detail.

There are only a few cells where (at most) only a single instance is required. The majority of cells within a dCache instance can have multiple instances and dCache is designed to allow load-balancing over these cells.

A domain is a container for running cells. Each domain runs in its own Java Virtual Machine (JVM) instance, which it cannot share with any other domain. In essence, a domain is a JVM with the additional functionality necessary to run cells (such as system administration and inter-cell communication). This also implies, that a node’s resources, such as memory, available CPU and network bandwidth, are shared among several domains running on the same node.

dCache comes with a set of domain definitions, each specifying a useful set of cells to run within that domain to achieve a certain goal. These goals include storing data, providing a front-end to the storage, recording file names, and so on. The list of cells to run within these domains are recommended deployments: the vast majority of dCache deployments do not need to alter these lists.

A node is free to run multiple domains, provided there’s no conflicting requirement from the domains for exclusive access to hardware. A node may run a single domain; but, typically a node will run multiple domains. The choice of which domains to run on which nodes will depend on expected load of the dCache instance and on the available hardware. If this sounds daunting, don’t worry: starting and stopping a domain is easy and migrating a domain from one node to another is often as easy as stopping the domain on one node and starting it on another.

dCache is scalable storage software. This means that (in most cases) the performance of dCache can be improved by introducing new hardware. Depending on the performance issue, the new hardware may be used by hosting a domain migrated from a overloaded node, or by running an additional instance of a domain to allow load-balancing.

Most cells communicate in such a way that they don’t rely on in which domain they are running. This allows a site to move cells from one domain to another or to create new domain definitions with some subset of available cells. Although this is possible, it is rare that redefining domains or defining new domains is necessary. Starting or stopping domains is usually sufficient for managing load.

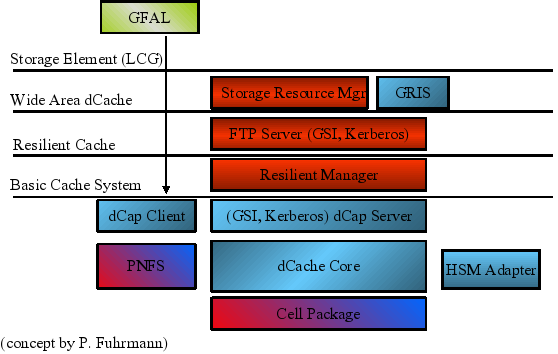

The layer model shown in Figure 1.1, “The dCache Layer Model” gives an overview of the architecture of the dCache system.

Table of Contents

The first section describes the installation of a fresh dCache instance using RPM files downloaded from the dCache home-page. It is followed by a guide to upgrading an existing installation. In both cases we assume standard requirements of a small to medium sized dCache instance without an attached tertiary storage system. The third section contains some pointers on extended features.

In the following the installation of a dCache

instance will be described. The Chimera name space provider,

some management components, and the SRM need a PostgreSQL server

installed. We recommend running this PostgreSQL on the local

node. The first section describes the configuration of a PostgreSQL

server. After that the installation of Chimera and of the

dCache components will follow. During the whole installation

process root access is required.

In order to install dCache the following requirements must be met:

An RPM-based Linux distribution is required for the following procedure. For Debian derived systems the RPM may be converted to a DEB using alien. Solaris is supported using either the Solaris package or the tarball.

dCache requires Java 1.6 JRE. Please use Sun JVM at the latest patch-level (at the moment of writing this is 1.6.0_25) and make sure to upgrade to the newest version frequently. It is recommendet to use JDK as dCache scripts can make use of some extra features that JDK provides to gather more diagnostic information (heap-dump, etc). This helps when tracking down bugs.

PostgreSQL must be installed and running. See the section called “Installing a PostgreSQL Server” for more details. PostgreSQL version 8.3 or later is required.

The RPM packages may be installed right away, for example using the command:

[root] #rpm -ivh dcache-server-<version>.noarch.rpm

The actual sources lie at http://www.dcache.org/downloads.shtml. To install for example Version 1.9.12-1 of the server you would use this:

[root] #rpm -ivh http://www.dcache.org/downloads/1.9/dcache-server-1.9.12-1.noarch.rpm

The client can be found in the download-section of the above url, too.

Using a PostgreSQL server with dCache places a number of requirements on the database. You must configure PostgreSQL for use by dCache and create the necessary PostgreSQL user accounts and database structure. This section describes how to do this.

Perhaps the simplest configuration is to allow password-less access to the database and the following documentation assumes this is so.

To allow local users to access PostgreSQL without requiring a

password, ensure the file

pg_hba.conf, usually located in

/var/lib/pgsql/data,

contains the following lines.

local all all trust host all all 127.0.0.1/32 trust host all all ::1/128 trust

Note

Please note it is also possible to run dCache with all PostgreSQL accounts requiring passwords. See the section called “Configuring Access to PostgreSQL” for more advice on the configuration of PostgreSQL.

Restarting PostgreSQL

If you have edited PostgreSQL configuration files, you must restart PostgreSQL for those changes to take effect. On many systems, this can be done with the following command:

[root] #/etc/init.d/postgresql restartStopping postgresql service: [ OK ] Starting postgresql service: [ OK ]

Chimera is a library providing a hierarchical name space

with associated meta data. Where pools in dCache store the

content of files, Chimera stores the names and meta data

of those files. Chimera itself stores the data in a

relational database. We will use PostgreSQL in this tutorial.

The properties of Chimera are defined in

/usr/share/dcache/defaults/chimera.properties.

See Chapter 4, Chimera for more information.

Create the Chimera user and database and add the Chimera-specific tables and stored procedures:

[root] #createdb -U postgres chimeraCREATE DATABASE[root] #createuser -U postgres --no-superuser --no-createrole --createdb --pwprompt chimeraEnter password for new role: Enter it again: CREATE ROLE You do not need to enter a password.[root] #psql -U chimera chimera -f /usr/share/dcache/chimera/sql/create.sqlpsql:/usr/share/dcache/chimera/sql/create.sql:23: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_inodes_pkey" for table "t_inodes" CREATE TABLE psql:/usr/share/dcache/chimera/sql/create.sql:35: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_dirs_pkey" for table "t_dirs" CREATE TABLE psql:/usr/share/dcache/chimera/sql/create.sql:45: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_inodes_data_pkey" for table "t_inodes_data" many more like this... INSERT 0 1 many more like this... INSERT 0 1 CREATE INDEX CREATE INDEX psql:/usr/share/dcache/chimera/sql/create.sql:256: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_storageinfo_pkey" for table "t_storageinfo" CREATE TABLE psql:/usr/share/dcache/chimera/sql/create.sql:263: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_access_latency_pkey" for table "t_access_latency" CREATE TABLE psql:/usr/share/dcache/chimera/sql/create.sql:270: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_retention_policy_pkey" for table "t_retention_policy" CREATE TABLE psql:/usr/share/dcache/chimera/sql/create.sql:295: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_locationinfo_pkey" for table "t_locationinfo" CREATE TABLE psql:/usr/share/dcache/chimera/sql/create.sql:311: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_locationinfo_trash_pkey" for table "t_locationinfo_trash" CREATE TABLE CREATE INDEX psql:/usr/share/dcache/chimera/sql/create.sql:332: NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "t_acl_pkey" for table "t_acl" CREATE TABLE CREATE INDEX[root] #createlang -U postgres plpgsql chimera[root] #psql -U chimera chimera -f /usr/share/dcache/chimera/sql/pgsql-procedures.sqlCREATE FUNCTION CREATE FUNCTION CREATE FUNCTION CREATE TRIGGER CREATE FUNCTION CREATE TRIGGER CREATE SEQUENCE CREATE FUNCTION CREATE TRIGGER

The dCache components will access the database server with the user srmdcache which can be created with the createuser; for example:

[root] #createuser -U postgres --no-superuser --no-createrole --createdb --pwprompt srmdcacheYou do not need to enter a password

Several management components running on the head node as

well as the SRM will use the database

dcache for storing their state

information:

[root] #createdb -U srmdcache dcache

There might be several of these on several hosts. Each is used by the dCache components running on the respective host.

Now the configuration of PostgreSQL is done.

In order to use the Admin Interface of dCache (see the section called “The Admin Interface”) you will need to generate ssh-keys.

[root] #ssh-keygen -b 768 -t rsa1 -f /etc/dcache/server_key -N ""Generating public/private rsa1 key pair. Your identification has been saved in /etc/dcache/server_key. Your public key has been saved in /etc/dcache/server_key.pub. The key fingerprint is: 33:ac:91:4c:21:4e:63:aa:2d:90:58:4d:72:e6:b5:88 root@example.org[root] #ssh-keygen -b 1024 -t rsa1 -f /etc/dcache/host_key -N ""Generating public/private rsa1 key pair. Your identification has been saved in /etc/dcache/host_key. Your public key has been saved in /etc/dcache/host_key.pub. The key fingerprint is: 75:a4:2b:24:cc:75:ad:b7:bd:8b:dd:1a:3e:96:3f:9d root@example.org

Now you can start dCache.

[root] #dcache startStarting dCacheDomain done

So far, no configuration is done, so only one predefined domain is started.

dCache consists of one or more domains. A domain in dCache is a Java Virtual Machine hosting one or more dCache cells. Each domain must have a name which is unique throughout the dCache instance and a cell must have a unique name within the domain hosting the cell.

A service is an abstraction used in the dCache configuration to describe atomic units to add to a domain. It is typically implemented through one or more cells. dCache keeps lists of the domains and the services that are to be run within these domains in the layout files. The layout file may contain domain- and service- specific configuration values. A pool is a cell providing physical data storage services.

In the setup of dCache, there are three main places for configuration files:

/usr/share/dcache/defaults/etc/dcache/dcache.conf/etc/dcache/layouts

The folder /usr/share/dcache/defaults

contains the default

settings of the dCache.

If one of the default configuration values needs to be changed, copy the default

setting of this value from one of the files in

/usr/share/dcache/defaults

to the file /etc/dcache/dcache.conf, which initially is

empty and update the value.

Note

In this first installation of dCache your dCache will not be connected to a tape sytem. Therefore please change the values for DefaultRetentionPolicy and DefaultAccessLatency in the file /etc/dcache/dcache.conf.

DefaultRetentionPolicy=REPLICA DefaultAccessLatency=ONLINE

Layouts describe which domains to run on a host and which services to run in each domain. For the customized configuration of your dCache you will create a mylayout.conf file in /etc/dcache/layouts.

Important

Do not update configuration values in the files in the defaults folder, since changes to these files will be overwritten by updates.

As the files in /usr/share/dcache/defaults/ do serve

as succinct documentation for all available configuration parameters and their

default values it is quite useful to have a look at them.

Domains and services are defined in the layout files. Depending on your site, you may have requirements upon the doors that you want to configure and domains within which you want to organise them.

A domain must be defined if services are to run in that domain. Services will be started in the order in which they are defined.

Every domain is a Java Virtual Machine that can be started and stopped separately. You might want to define several domains for the different services depending on the necessity of restarting the services separately.

The layout files define which domains to start and which services to put in which domain. Configuration can be done per domain and per service.

A name in square brackets, without a

forward-slash (/) defines a domain. A

name in square brackets with a forward

slash defines a service that is to run in a domain. Lines

starting with a hash-symbol (#) are

comments and will be ignored by dCache.

There may be several layout files in the layout directory, but only one

of them is read by dCache when starting up. By default it is the

single.conf.

If the dCache should be started with another layout file you will have to make

this configuration in /etc/dcache/dcache.conf.

Example:

dcache.layout=mylayoutThis entry in

/etc/dcache/dcache.conf

will instruct dCache to read the layout file

/etc/dcache/layouts/mylayout.conf when starting up.

Example:

/etc/dcache/layouts/single.conf:

broker.scheme=none [dCacheDomain] [dCacheDomain/admin] [dCacheDomain/broadcast] [dCacheDomain/poolmanager]

[ defines a domain

called dCacheDomain]dCacheDomain. In this example only one domain is defined.

All the services are running in that domain. Therefore

no messagebroker is needed, which is the meaning of the entry

messageBroker=none.

[ declares that

the dCacheDomain/admin]admin service is to be run in the dCacheDomain domain.

Example:

Here is an example for the first lines of the mylayout.conf

of a single node dCache with several domains.

[dCacheDomain] [dCacheDomain/poolmanager] [dCacheDomain/dummy-prestager] [dCacheDomain/broadcast] [dCacheDomain/loginbroker] #[dCacheDomain/topo] [namespaceDomain] [namespaceDomain/pnfsmanager] [namespaceDomain/cleaner] [namespaceDomain/acl] [namespaceDomain/nfsv3] [gPlazmaDomain] [gPlazmaDomain/gplazma] [srmDomain] [srmDomain/srm] [srmDomain/spacemanager] [srmDomain/transfermanagers]

Important

Having defined more than one domain, a messagebroker is needed. This is because the defined domains need to be able to communicate with each other. The default value ismessageBroker=cells, as defined in the defaults

/usr/share/dcache/defaults/dcache.properties.

dCache will need to write the files it keeps in pools. These pools are defined as services within dCache. Hence, they are added to the layout file of your dCache instance, like all other services.

The best way to create a pool, is to use the dcache script and restart the domain the pool runs in. The pool will be added to your layout file.

[<domainname>/pool]

name=<poolname>

path=/path/to/pool

waitForFiles=${path}/data

The property waitForFiles instructs the

pool not to start up until the specified file or directory

is available. This prevents problems should the underlying storage be unavailable (e.g., if a

RAID device is offline).

Note

Please restart dCache if your pool is created in a domain that did not exist before.

Example:

[root] #dcache pool create /srv/dcache/p1 pool1 poolDomainCreated a pool in /srv/dcache/p1. The pool was added to poolDomain in file:/etc/dcache/layouts/mylayout.conf.

In this example we create a pool called pool1 in the directory /srv/dcache/p1.

The created pool will be running in the domain poolDomain.

Now you need to change the ownership of the directories where your data is to be stored to the user running dCache which by default is dcache.

[root] #chown -R dcache /srv/dcache/p1

Mind the Gap!

The default gap for poolsizes is 4GiB. This means you should make a bigger pool than 4GiB otherwise you would have to change this gap in the dCache admin tool. See the example below. See also the section called “The Admin Interface”.

(local) admin >cd <poolname>(<poolname>) admin >set gap 2G(<poolname>) admin >save

Adding a pool to a configuration does not modify the pool or the data in it and can thus safely be undone or repeated.

By default the Java heap size and the maximum direct buffer size are defined as

dcache.java.memory.heap=512m dcache.java.memory.direct=512m

Again, these values can be changed in

/etc/dcache/dcache.conf.

For optimization of your dCache you can define the Java heap size in the layout file separately for every domain.

Example:

[dCacheDomain] dcache.java.memory.heap=2048m dcache.java.memory.direct=0m ... [utilityDomain] dcache.java.memory.heap=384m dcache.java.memory.direct=16m

Note

dCache uses Java to parse the configuration files and

will search for Java on the system path first; if it is

found there, no further action is needed. If Java is not

on the system path, the environment variable

JAVA_HOME defines the location of the

Java installation directory. Alternatively, the

environment variable JAVA can be used to

point to the Java executable directly.

If JAVA_HOME or JAVA cannot

be defined as global environment variables in the

operating system, then they can be defined in either

/etc/default/dcache or

/etc/dcache.env. These two files are

sourced by the init script and allow

JAVA_HOME, JAVA and

DCACHE_HOME to be defined.

All configured components can now be started with

dcache start.

Example:

[root] #dcache startStarting dCacheDomain Done (pid=7574) Starting namespaceDomain Done (pid=7647) Starting gPlazmaDomain Done (pid=8002) Starting srmDomain Done (pid=8081)

Now you can have a look at your dCache via The Web

Interface, see the section called “The Web Interface for Monitoring dCache”:

http://<httpd.example.org>:2288/,

where <httpd.example.org> is the node on which your

httpd service is running.

For a single node dCache this is the machine on which your dCache is running.

For security reasons you might want to run dCache as a

non-root user. This needs to be configured in

/etc/dcache/dcache.conf and the

ownership of the pools and the billing directories need to

be changed.

Example:

In this example we explain how to configure dCache to

run as user dcache.

First of all create the user dcache and a new

directory /var/log/dcache, owned by

dcache

[root] #useradd dcache[root] #mkdir /var/log/dcache[root] #chown dcache /var/log/dcache

and add two lines to

/etc/dcache/dcache.conf.

dcache.user=dcache dcache.paths.billing=/var/log/dcache

Change the ownership of the files

/etc/grid-security/hostcert.pem and

/etc/grid-security/hostkey.pem

[root] #chown dcache /etc/grid-security/hostcert.pem[root] #chown dcache /etc/grid-security/hostkey.pem

In the layout file

/etc/dcache/layouts/mylayout.conf

a pool was defined.

[${host.name}Domain/pool]

name=pool1

path=/path/to/pool1

maxDiskSpace=2TChange the ownership of the directory of the pool.

[root] #chown -R dcache /path/to/pool1

Moreover, if dCache has been running as root the

files /tmp/createDDL.jdbc and

/tmp/dropDDL.jdbc need to be removed

as they are owned by the user root.

[root] #rm -f /tmp/createDDL.jdbc /tmp/dropDDL.jdbc

Important

If you switched the portmap daemon off the domain in which

the NFS server is running needs to be running as

root. This needs to be set in the layout file.

[namespaceDomain] dcache.user=root [namespaceDomain/pnfsmanager] [namespaceDomain/cleaner] [namespaceDomain/acl] [namespaceDomain/nfsv3]

Now you can start dCache as user dcache

[root] #dcache startStarting dCacheDomain done Starting namespaceDomain done Starting gPlazmaDomain done Starting srmDomain done

and verifiy that dCache is running as dcache and

the NFS server is running as root.

[root] #dcache statusDOMAIN STATUS PID USER dCacheDomain running 11040 dcache namespaceDomain running 11117 root gPlazmaDomain running 11125 dcache srmDomain running 11182 dcache

Installing dCache on several nodes is not much more

complicated than installing it on a single node. Think about

how dCache should be organised regarding services and

domains. Then adapt the layout files, as described in the section called “Defining domains and services”, to the layout that you have in

mind. The files

/etc/dcache/layouts/head.conf and

/etc/dcache/layouts/pool.conf contain

examples for a dCache head-node and a dCache pool

respectively.

Important

You must configure a domain called dCacheDomain but the

other domain names can be chosen freely.

Please make sure that the domain names that you choose are unique. Having the same domain names in different layout files on different nodes may result in an error.

On any other nodes than the head node, the property

broker.host has to be added to the file

/etc/dcache/dcache.conf.

broker.host should point to the host running

your dCache broker. Usually that is the host containing the

special domain dCacheDomain, because that domain acts

implicitly as a broker.

Tip

On dCache nodes running only pool services you do not need to install PostgreSQL. If your current node hosts only these services, the installation of PostgreSQL can be skipped.

Important

Always read the release notes carefully before upgrading!

Upgrading to bugfix releases within one supported branch (e.g. from 1.9.12-1 to 1.9.12-2) may be done by upgrading the packages with

[root] #rpm -Uvh <packageName>

Now dCache needs to be started again.

Please use The Ultimate Golden Release Upgrade Guide I to upgrade from 1.9.5 to 1.9.12.

Table of Contents

This section is a guide for exploring a newly installed dCache system. The confidence obtained by this exploration will prove very helpful when encountering problems in the running system. This forms the basis for the more detailed stuff in the later parts of this book. The starting point is a fresh installation according to the the section called “Installing a dCache instance”.

Reading and writing data to and from a dCache instance can be

done with a number of protocols. After a standard installation,

these protocols are dCap, GSIdCap, and GridFTP. In

addition dCache comes with an implementation of the SRM

protocol which negotiates the actual data transfer protocol.

Create the root of the Chimera namespace and a world-writable directory by

[root] #/usr/bin/chimera-cli mkdir /data[root] #/usr/bin/chimera-cli mkdir /data/world-writable[root] #/usr/bin/chimera-cli chmod /data/world-writable 777

To use WebDAV you need to define a WebDAV service in

your layout file. You can define this service in an extra

domain, e.g. [webdavDomain] or add it to

another domain.

[webdavDomain] [webdavDomain/webdav] webdavAnonymousAccess=FULL

to the file /etc/dcache/layouts/mylayout.conf.

Note

Depending on the client you might need to set

webdav.redirect.on-read=false and/or

webdav.redirect.on-write=false.

# ---- Whether to redirect GET requests to a pool # # If true, WebDAV doors will respond with a 302 redirect pointing to # a pool holding the file. This requires that a pool can accept # incoming TCP connections and that the client follows the # redirect. If false, data is relayed through the door. The door # will establish a TCP connection to the pool. # (one-of?true|false)webdav.redirect.on-read=true # ---- Whether to redirect PUT requests to a pool # # If true, WebDAV doors will respond with a 307 redirect pointing to # a pool to which to upload the file. This requires that a pool can # accept incoming TCP connections and that the client follows the # redirect. If false, data is relayed through the door. The door # will establish a TCP connection to the pool. Only clients that send # a Expect: 100-Continue header will be redirected - other requests # will always be proxied through the door. # (one-of?true|false)webdav.redirect.on-write=true

Now you can start the WebDAV domain

[root] #dcache start webdavDomain

and access your files via

http://<webdav-door.example.org>:2880

with your browser.

You can connect the webdav server to your file manager and copy a file into your dCache.

To use curl to copy a file into your dCache you will need

to set webdav.redirect.on-write=false.

Example:

Write the file test.txt

[root] #curl -T test.txt http://webdav-door.example.org:2880/data/world-writable/curl-testfile.txt

and read it

[root] #curl http://webdav-door.example.org:2880/data/world-writable/curl-testfile.txt

dCache can also be used with a mounted file system.

Before mounting the name space you need to edit the

/etc/exports file. Add the lines

/ localhost(rw) /data

stop the portmapper

[root] #/etc/init.d/portmap stopStopping portmap: portmap

and restart dCache.

[root] #dcache restart

Now you can mount Chimera.

[root] #mount localhost:/ /mnt

With the root of the namespace mounted you can establish

wormhole files so dCap clients can discover the dCap

doors.

[root] #mkdir /mnt/admin/etc/config/dCache[root] #touch /mnt/admin/etc/config/dCache/dcache.conf[root] #touch /mnt/admin/etc/config/dCache/'.(fset)(dcache.conf)(io)(on)'[root] #echo "<dcache.example.org>:22125" > /mnt/admin/etc/config/dCache/dcache.conf

Create the directory in which the users are going to store their data and change to this directory.

[root] #mkdir -p /mnt/data[root] #cd /mnt/data

To be able to use dCap you need to have the dCap door

running in a domain.

Example:

[dCacheDomain] [dCacheDomain/dcap]

For this tutorial install dCap on your worker node. This

can be the machine where your dCache is running.

Get the gLite repository (which contains dCap) and

install dCap using yum.

[root] #cd /etc/yum.repos.d/[root] #wget http://grid-deployment.web.cern.ch/grid-deployment/glite/repos/3.2/glite-UI.repo[root] #yum install dcap

Create the root of the Chimera namespace and a

world-writable directory for dCap to write into by

[root] #/usr/bin/chimera-cli mkdir /data[root] #/usr/bin/chimera-cli mkdir /data/world-writable[root] #/usr/bin/chimera-cli chmod /data/world-writable 777

Copy the data (here /bin/sh is used as

example data) using the dccp command and the dCap

protocol describing the location of the file using a URL,

where <dcache.example.org> is

the host on which the dCache is

running

[root] #dccp -H /bin/sh dcap://<dcache.example.org>/data/world-writable/my-test-file-1[##########################################################################################] 100% 718 kiB 735004 bytes (718 kiB) in 0 seconds

and copy the file back.

[root] #dccp -H dcap://<dcache.example.org>/data/world-writable/my-test-file-1 /tmp/mytestfile1[##########################################################################################] 100% 718 kiB 735004 bytes (718 kiB) in 0 seconds

To remove the file you will need to mount the namespace.

dCap can also be used with a mounted file system.

Before mounting the name space you need to edit the

/etc/exports file. Add the lines

/ localhost(rw) /data

stop the portmapper

[root] #/etc/init.d/portmap stopStopping portmap: portmap

and restart dCache.

[root] #dcache restart

Now you can mount Chimera.

[root] #mount localhost:/ /mnt

With the root of the namespace mounted you can establish

wormhole files so dCap clients can discover the dCap

doors.

[root] #mkdir /mnt/admin/etc/config/dCache[root] #touch /mnt/admin/etc/config/dCache/dcache.conf[root] #touch /mnt/admin/etc/config/dCache/'.(fset)(dcache.conf)(io)(on)'[root] #echo "<dcache.example.org>:22125" > /mnt/admin/etc/config/dCache/dcache.conf

Create the directory in which the users are going to store their data and change to this directory.

[root] #mkdir -p /mnt/data[root] #cd /mnt/data

Now you can copy a file into your dCache

[root] #dccp /bin/sh my-test-file-2735004 bytes (718 kiB) in 0 seconds

and copy the data back using the dccp command.

[root] #dccp my-test-file-2 /tmp/mytestfile2735004 bytes (718 kiB) in 0 seconds

The file has been transferred succesfully.

Now remove the file from the dCache.

[root] #rm my-test-file-2

In dCache digital certificates are used for authentication and authorisation. To be able to verify the chain of trust when using the non-commercial grid-certificates you should install the list of certificates of grid Certification Authorities (CAs). In case you are using commercial certificates you will find the list of CAs in your browser.

[root] #wget http://grid-deployment.web.cern.ch/grid-deployment/glite/repos/3.2/lcg-CA.repo--2011-02-10 10:26:10-- http://grid-deployment.web.cern.ch/grid-deployment/glite/repos/3.2/lcg-CA.repo Resolving grid-deployment.web.cern.ch... 137.138.142.33, 137.138.139.19 Connecting to grid-deployment.web.cern.ch|137.138.142.33|:80... connected. HTTP request sent, awaiting response... 200 OK Length: 449 [text/plain] Saving to: `lcg-CA.repo' 100%[====================================================================>] 449 --.-K/s in 0s 2011-02-10 10:26:10 (61.2 MB/s) - `lcg-CA.repo' saved [449/449][root] #mv lcg-CA.repo /etc/yum.repos.d/[root] #yum install lcg-CALoaded plugins: allowdowngrade, changelog, kernel-module CA | 951 B 00:00 CA/primary | 15 kB 00:00 CA ...

You will need a server certificate for the host on which your

dCache is running and a user certificate. The host certificate

needs to be copied to the directory /etc/grid-security/ on your server

and converted to hostcert.pem and

hostkey.pem as described in Using X.509

Certificates. Your user certificate is usually located in

.globus. If it is not

there you should copy it from your browser to .globus and convert the

*.p12 file to

usercert.pem and

userkey.pem.

Example:

If you have the clients installed on the machine on which your dCache is running you will need to add a user to that machine in order to be able to execute the voms-proxy-init command and execute voms-proxy-init as this user.

[root] #useradd johndoe

Change the password of the new user in order to be able to copy files to this account.

[root] #passwd johndoeChanging password for user johndoe. New UNIX password: Retype new UNIX password: passwd: all authentication tokens updated successfully.[root] #su johndoe[user] $cd[user] $mkdir .globus

Copy your key files from your local machine to the new user on the machine where the dCache is running.

[user] $scp .globus/user*.pem johndoe@<dcache.example.org>:.globus

Install glite-security-voms-clients (contained in the gLite-UI).

[root] #yum install glite-security-voms-clients

Generate a proxy certificate using the command voms-proxy-init.

Example:

[user] $voms-proxy-initEnter GRID pass phrase: Your identity: /C=DE/O=GermanGrid/OU=DESY/CN=John Doe Creating proxy .............................................. Done Your proxy is valid until Mon Mar 7 22:06:15 2011

With voms-proxy-init -voms

<yourVO> you can add VOMS

attributes to the proxy. A user’s roles (Fully Qualified

Attribute Names) are read from the certificate chain found within

the proxy. These attributes are signed by the user’s VOMS server

when the proxy is created. For the voms-proxy-init -voms

command you need to have the file

/etc/vomses which contains entries

about the VOMS servers like

Example:

"desy" "grid-voms.desy.de" "15104" "/C=DE/O=GermanGrid/OU=DESY/CN=host/grid-voms.desy.de" "desy" "24"

"atlas" "voms.cern.ch" "15001" "/DC=ch/DC=cern/OU=computers/CN=voms.cern.ch" "atlas" "24"

"dteam" "lcg-voms.cern.ch" "15004" "/DC=ch/DC=cern/OU=computers/CN=lcg-voms.cern.ch" "dteam" "24"

"dteam" "voms.cern.ch" "15004" "/DC=ch/DC=cern/OU=computers/CN=voms.cern.ch" "dteam" "24"

Now you can generate your voms proxy containing your VO.

Example:

[user] $voms-proxy-init -voms desyEnter GRID pass phrase: Your identity: /C=DE/O=GermanGrid/OU=DESY/CN=John Doe Creating temporary proxy ................................... Done Contacting grid-voms.desy.de:15104 [/C=DE/O=GermanGrid/OU=DESY/CN=host/grid-voms.desy.de] "desy" Done Creating proxy .................... Done Your proxy is valid until Thu Mar 31 21:49:06 2011

Authentication and authorization in dCache is done by the

gplazma service. Define this service in the layout file.

[gPlazmaDomain] [gPlazmaDomain/gplazma]

In this tutorial we will use the gplazmalite-vorole-mapping

plugin. To this end you need to edit the

/etc/grid-security/grid-vorolemap and the

/etc/grid-security/storage-authzdb as well

as the

/etc/dcache/dcachesrm-gplazma.policy.

Example:

The /etc/grid-security/grid-vorolemap:

"/C=DE/O=GermanGrid/OU=DESY/CN=John Doe" "/desy" doegroup

The /etc/grid-security/storage-authzdb:

version 2.1 authorize doegroup read-write 12345 1234 / / /

The /etc/dcache/dcachesrm-gplazma.policy:

# Switches

xacml-vo-mapping="OFF"

saml-vo-mapping="OFF"

kpwd="OFF"

grid-mapfile="OFF"

gplazmalite-vorole-mapping="ON"

# Priorities

xacml-vo-mapping-priority="5"

saml-vo-mapping-priority="2"

kpwd-priority="3"

grid-mapfile-priority="4"

gplazmalite-vorole-mapping-priority="1"

If you want to copy files into dCache with GSIdCap, SRM or

WebDAV with certificates you need to follow the instructions

in the section above.

To use GSIdCap you must run a GSIdCap door. This is

achieved by including the gsidcap service in your

layout file on the machine you wish to host the door.

[gsidcapDomain] [gsidcapDomain/gsidcap]

In addition, you need to have libdcap-tunnel-gsi installed on your worker node, which is contained in the gLite-UI.

Note

As ScientificLinux 5 32bit is not supported by gLite there is no libdcap-tunnel-gsi for SL5 32bit.

[root] #yum install libdcap-tunnel-gsi

It is also available on the dCap downloads

page.

Example:

[root] #rpm -i http://www.dcache.org/repository/yum/sl5/x86_64/RPMS.stable//libdcap-tunnel-gsi-2.47.5-0.x86_64.rpm

The machine running the GSIdCap door needs to have a host

certificate and you need to have a valid user

certificate. In addition, you should have created a voms proxy as mentioned

above.

Now you can copy a file into your dCache using GSIdCap

[user] $dccp /bin/sh gsidcap://<dcache.example.org>:22128/data/world-writable/my-test-file3801512 bytes in 0 seconds

and copy it back

[user] $dccp gsidcap://<dcache.example.org>:22128/data/world-writable/my-test-file3 /tmp/mytestfile3.tmp801512 bytes in 0 seconds

To use the SRM you need to define the srm service

in your layout file.

[srmDomain] [srmDomain/srm]

In addition, the user needs to install an SRM client for

example the dcache-srmclient, which

is contained in the gLite-UI, on the worker node and set

the PATH environment variable.

[root] #yum install dcache-srmclient

You can now copy a file into your dCache using the SRM,

[user] $srmcp -2 file:////bin/sh srm://<dcache.example.org>:8443/data/world-writable/my-test-file4

copy it back

[user] $srmcp -2 srm://<dcache.example.org>:8443/data/world-writable/my-test-file4 file:////tmp/mytestfile4.tmp

and delete it

[user] $srmrm -2 srm://<dcache.example.org>:8443/data/world-writable/my-test-file4

If the grid functionality is not required the file can be

deleted with the NFS mount of the Chimera namespace:

[user] $rm/data/world-writable/my-test-file4

To use WebDAV with certificates you change the entry in

/etc/dcache/layouts/mylayout.conf from

[webdavDomain] [webdavDomain/webdav] webdavAnonymousAccess=FULL webdavRootPath=/data/world-writable

to

[webdavDomain] [webdavDomain/webdav] webdavAnonymousAccess=NONE webdavRootPath=/data/world-writable webdavProtocol=https

Then you will need to import the host certificate into the dCache keystore using the command

[root] #dcache import hostcert

and initialise your truststore by

[root] #dcache import cacerts

Now you need to restart the WebDAV domain

[root] #dcache restart webdavDomain

and access your files via

https://<dcache.example.org>:2880

with your browser.

Important

If the host certificate contains an extended key usage

extension, it must include the extended usage for server

authentication. Therefore you have to make sure that your

host certificate is either unrestricted or it is explicitly

allowed as a certificate for TLS Web Server

Authentication.

You can also choose to have secure and insecure access to your files at the same time. You might for example allow access without authentication for reading and access with authentication for reading and writing.

[webdavDomain] [webdavDomain/webdav] webdavRootPath=/data/world-writable webdavAnonymousAccess=READONLY port=2880 webdavProtocol=https

You can access your files via

https://<dcache.example.org>:2880

with your browser.

In the standard configuration the dCache web interface is

started on the head node (meaning that the domain hosting the

httpd service is running on the head node) and can be

reached via port 2288. Point a web browser to

http://<head-node.example.org>:2288/

to get to the main menu of the dCache web interface. The

contents of the web interface are self-explanatory and are the

primary source for most monitoring and trouble-shooting tasks.

The “Cell Services” page displays the status of some important cells of the dCache instance.

The “Pool Usage” page gives a good overview of the current space usage of the whole dCache instance. In the graphs, free space is marked yellow, space occupied by cached files (which may be deleted when space is needed) is marked green, and space occupied by precious files, which cannot be deleted is marked red. Other states (e.g., files which are currently written) are marked purple.

The page “Pool Request Queues” (or “Pool Transfer Queues”) gives information about the number of current requests handled by each pool. “Actions Log” keeps track of all the transfers performed by the pools up to now.

The remaining pages are only relevant with more advanced configurations: The page “Pools” (or “Pool Attraction Configuration”) can be used to analyze the current configuration of the pool selection unit in the pool manager. The remaining pages are relevant only if a tertiary storage system (HSM) is connected to the dCache instance.

In this section we will have a look at the configuration and log files of dCache.

The dCache software is installed in various directories

according to the Filesystem Hierarchy Standard. All

configuration files can be found in /etc/dcache.

In the previous section we have already seen how a domain is restarted:

[root] #dcache restart <domainName>

Log files of domains are by default stored in

/var/log/dcache/<domainName>.log. We

strongly encourage to configure logrotate to rotate the dCache

log files to avoid filling up the log file system. This can

typically be achieved by creating the file

/etc/logrotate.d/dcache with the following

content:

/var/log/dcache/*.log {

compress

rotate 100

missingok

copytruncate

}More details about domains and cells can be found in Chapter 6, The Cell Package.

The most central component of a dCache instance is the

PoolManager cell. It reads additional configuration

information from the file

/var/lib/dcache/config/poolmanager.conf at

start-up. However, it is not necessary to restart the domain

when changing the file. We will see an example of this below.

Similar to /var/lib/dcache/config/poolmanager.conf,

pools read their configuration from

<poolDir>/pool/setup

at startup.

Just use commands that are documented here

Only commands described in this documentation should be used for the administration of a dCache system.

Note

If you attempt to log into the admin interface without

generating the ssh-keys you will get an error message.

[user] $ssh -c blowfish -p 22223 -l admin headnode.example.orgConnection closed by 192.0.2.11

dCache has a powerful administration interface. It is

accessed with the ssh protocol. The server is part of the

adminDoor domain. Connect to it with

[user] $ssh -c blowfish -p 22223 -l admin headnode.example.org

The initial password is

“dickerelch” (which is German

for “fat elk”) and you will be greeted by the

prompt

dCache Admin (VII) (user=admin)

(local) admin >The password can now be changed with

(local) admin >cd acm(acm) admin >create user admin(acm) admin >set passwd -user=admin <newPasswd> <newPasswd>(acm) admin >..(local) admin >logoff

This already illustrates how to navigate within the administration interface:

Starting from the local prompt ((local) admin >) the command

cd takes you to the specified cell (here acm, the access

control manager). There two commands are executed. The escape

sequence .. takes you back to the local

prompt and logoff exits the admin shell.

There also is the command help for listing all commands the cell knows and their parameters. However, many of the commands are only used for debugging and development purposes.

Note

If the cells are well-known, they can be accessed without adding the domain-scope. See Chapter 6, The Cell Package for more information.

The domains that are running on the dCache-instance, can be

viewed in the layout-configuration (see Chapter 2, Installing dCache). Additionally, there is the topo cell, which keeps

track of the instance’s domain topology. If it is running, it

can be used to obtain the list of domains the following way:

Example:

(local) admin >cd topo(topo) admin >lsadminDoorDomain gsidcapDomain dcapDomain utilityDomain gPlazmaDomain webdavDomain gridftpDomain srmDomain dCacheDomain httpdDomain namespaceDomain poolDomain

Note

The topo cell rescans periodically which domains are

running, so it can take some time until ls

displays the full domain list.

If you want to find out which cells are running on a certain

domain, you can issue the command ps in the

System cell of the domain.

Example:

For example, if you want to list the cells running on the

poolDomain, cd to its

System cell and issue the ps

command.

(topo) admin >..(local) admin >cd System@poolDomain(System@poolDomain) admin >psCell List ------------------ c-dCacheDomain-101-102 System pool_2 c-dCacheDomain-101 pool_1 RoutingMgr lm

The cells in the domain can be accessed using cd together with the cell-name scoped by the domain-name. So first, one has to get back to the local prompt, as the cd command will not work otherwise.

Note

Note that cd only works from the local prompt. If the cell you are trying to access does not exist, the cd command will complain.

Example:

(local) admin >cd nonsensejava.lang.IllegalArgumentException: Cannot cd to this cell as it doesn't exist

Type .. to return to the (local) admin >

prompt.

Login to the routing manager of the dCacheDomain to get a

list of all well-known cells you can directly

cd to without having to add the domain.

Example:

(System@poolDomain) admin >..(local) admin >cd RoutingMgr@dCacheDomain(RoutingMgr@dCacheDoorDomain) admin >lsOur routing knowledge : Local : [PoolManager, topo, broadcast, LoginBroker, info] adminDoorDomain : [pam] gsidcapDomain : [DCap-gsi-example.dcache.org] dcapDomain : [DCap-example.dcache.org] utilityDomain : [gsi-pam, PinManager] gPlazmaDomain : [gPlazma] webdavDomain : [WebDAV-example.dcache.org] gridftpDomain : [GFTP-example.dcache.org] srmDomain : [RemoteTransferManager, CopyManager, SrmSpaceManager, SRM-example.dcache.org] httpdDomain : [billing, srm-LoginBroker, TransferObserver] poolDomain : [pool_2, pool_1] namespaceDomain : [PnfsManager, dirLookupPool, cleaner]

All cells know the commands info for general information about the cell and show pinboard for listing the last lines of the pinboard of the cell. The output of these commands contains useful information for solving problems.

It is a good idea to get aquainted with the normal output in the

following cells: PoolManager, PnfsManager, and the pool

cells (e.g., <poolHostname>_1).

The most useful command of the pool cells is rep ls. To execute this command

cd into the pool. It lists the files which

are stored in the pool by their pnfs IDs:

Example:

(RoutingMgr@dCacheDoorDomain) admin >..(pool_1) admin >rep ls000100000000000000001120 <-P---------(0)[0]> 485212 si={myStore:STRING} 000100000000000000001230 <C----------(0)[0]> 1222287360 si={myStore:STRING}

Each file in a pool has one of the 4 primary states:

“cached” (<C---),

“precious” (<-P--),

“from client” (<--C-), and

“from store” (<---S).

See the section called “How to Store-/Restore files via the Admin Interface” for more information about rep ls.

The most important commands in the PoolManager are: rc ls and cm ls -r.

rc ls lists the requests currently handled

by the PoolManager. A typical line of output for a read request

with an error condition is (all in one line):

Example:

(pool_1) admin >..(local) admin >cd PoolManager(PoolManager) admin >rc ls000100000000000000001230@0.0.0.0/0.0.0.0 m=1 r=1 [<unknown>] [Waiting 08.28 19:14:16] {149,No pool candidates available or configured for 'staging'}

As the error message at the end of the line indicates, no pool was found containing the file and no pool could be used for staging the file from a tertiary storage system.

See the section called “Obtain information via the dCache Command Line Admin Interface” for more information about the command rc ls

Finally, cm ls with the option

-r gives the information about the pools

currently stored in the cost module of the pool manager. A

typical output is:

Example:

(PoolManager) admin >cm lspool_1={R={a=0;m=2;q=0};S={a=0;m=2;q=0};M={a=0;m=100;q=0};PS={a=0;m=20;q=0};PC={a=0;m=20;q=0}; (...continues...) SP={t=2147483648;f=924711076;p=1222772572;r=0;lru=0;{g=20000000;b=0.5}}} pool_1={Tag={{hostname=example.org}};size=0;SC=0.16221282938326134;CC=0.0;} pool_2={R={a=0;m=2;q=0};S={a=0;m=2;q=0};M={a=0;m=100;q=0};PS={a=0;m=20;q=0};PC={a=0;m=20;q=0}; (...continues...) SP={t=2147483648;f=2147483648;p=0;r=0;lru=0;{g=4294967296;b=250.0}}} pool_2={Tag={{hostname=example.org}};size=0;SC=2.7939677238464355E-4;CC=0.0;}-r

While the first line for each pool gives the information stored in the cache of the cost module, the second line gives the costs (SC: space cost, CC: performance cost) calculated for a (hypothetical) file of zero size. For details on how these are calculated and their meaning, see the section called “The Cost Module”.

To create a new user, <new-user> and set a new password for

the user cd from the local prompt

((local) admin >) to the acm, the access control

manager, and run following command sequence:

(local) admin >cd acm(acm) admin >create user <new-user>(acm) admin >set passwd -user=<new-user> <newPasswd> <newPasswd>

For the new created users there will be an entry in the

directory /etc/dcache/admin/users/meta.

Note

As the initial user admin has not been

created with the above command you will not find him in the

directory /etc/dcache/admin/users/meta.

Give the new user access to a particular cell:

(acm) admin >create acl cell.<cellName>.execute(acm) admin >add access -allowed cell.<cellName>.execute <new-user>

Example:

Give the new user access to the PnfsManager.

(acm) admin >create acl cell.PnfsManager.execute(acm) admin >add access -allowed cell.PnfsManager.execute <new-user>

Now you can check the permissions by:

(acm) admin >check cell.PnfsManager.execute <new-user>Allowed(acm) admin >show acl cell.PnfsManager.execute<noinheritance> <new-user> -> true

The following commands allow access to every cell for a user <new-user>:

(acm) admin >create acl cell.*.execute(acm) admin >add access -allowed cell.*.execute <new-user>

The following command makes a user as powerful as admin

(dCache’s equivalent to the root user):

(acm) admin >create acl *.*.*(acm) admin >add access -allowed *.*.* <new-user>

The ssh admin interface can be used non-interactively by

scripts. For this the dCache-internal ssh server uses

public/private key pairs.

The file /etc/dcache/authorized_keys~/.ssh/authorized_keys which is used by

sshd. The keys in

/etc/dcache/authorized_keys

[user] $ssh-keygen -t rsa1 -C 'SSH1 key of <user>'Generating public/private rsa1 key pair. Enter file in which to save the key (/home/<user>/.ssh/identity): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/<user>/.ssh/identity. Your public key has been saved in /home/<user>/.ssh/identity.pub. The key fingerprint is: c1:95:03:6a:66:21:3c:f3:ee:1b:8d:cb:46:f4:29:6a SSH1 key of <user>

The passphrase is used to encrypt the private key (now stored in

/home/<user>/.ssh/identity). If

you do not want to enter the passphrase every time the private

key is used, you can use ssh-add to add it to

a running ssh-agent. If no agent is running

start it with

[user] $if [ -S $SSH_AUTH_SOCK ] ; then echo "Already running" ; else eval `ssh-agent` ; fi

and add the key to it with

[user] $ssh-addEnter passphrase for SSH1 key of <user>: Identity added: /home/<user>/.ssh/identity (SSH1 key of <user>)

Now, insert the public key

~/.ssh/identity.pub as a separate line into

/etc/dcache/authorized_keys

1024 35 141939124(... many more numbers ...)15331 admin

Using ssh-add -L >> /etc/dcache/authorized_keys will not work, because the line added is not correct.

The key manager within dCache will read this file every minute.

Now, the ssh program should not ask for a password anymore. This

is still quite secure, since the unencrypted private key is only

held in the memory of the ssh-agent. It can

be removed from it with

[user] $ssh-add -dIdentity removed: /home/<user>/.ssh/identity (RSA1 key of <user>)

In scripts, one can use a “Here Document” to list the commands, or supply them to ssh as standard-input (stdin). The following demonstrates using a Here Document:

#!/bin/sh

#

# Script to automate dCache administrative activity

outfile=/tmp/$(basename $0).$$.out

ssh -c blowfish -p 22223 admin@<adminNode> > $outfile << EOF

cd PoolManager

cm ls -r

(more commands here)

logoff

EOFor, the equivalent as stdin.

#!/bin/bash

#

# Script to automate dCache administrative activity.

echo -e 'cd <pool_1>\nrep ls\n(more commands here)\nlogoff' \

| ssh -c blowfish -p 22223 admin@<adminNode> \

| tr -d '\r' > rep_ls.outThis part contains descriptions of the components of dCache, their role, functionality within the framework. In short, all information necessary for configuring them.

Table of Contents

- 4. Chimera

- 5.

pnfs - 6. The Cell Package

- 7. The

replicaService (Replica Manager) - 8. The

poolmanagerService - 9. The dCache Tertiary Storage System Interface

- Introduction

- Scope of this chapter

- Requirements for a Tertiary Storage System

- How dCache interacts with a Tertiary Storage System

- Details on the TSS-support

executable - Configuring pools to interact with a Tertiary Storage System

- How to Store-/Restore files via the Admin Interface

- How to monitor what’s going on

- Example of an

executableto simulate a tape backend

- 10. File Hopping

- 11. dCache Partitioning

- 12. Authorization in dCache

- 13. dCache as xRootd-Server

- 14. dCache Storage Resource Manager

- 15. The

statisticsService - 16. dCache Webadmin-Interface

- 17. ACLs in dCache

- 18. GLUE Info Provider

- 19. Stage Protection

Table of Contents

dCache is a distributed storage system, nevertheless it provides a single-rooted file system view. While dCache supports multiple namespace providers, Chimera is the recommended provider and is used by default.

The inner dCache components talk to the namespace via a module

called PnfsManager, which in turn communicates with the

Chimera database using a thin Java layer, which in turn

communicates directly with the Chimera database. Chimera

allows direct access to the namespace by providing an NFSv3 and

NFSv4.1 server. Clients can NFS-mount the namespace locally.

This offers the opportunity to use OS-level tools like

ls, mkdir, mv for Chimera. Direct

I/O-operations like cp and cat are possible with

the NFSv4.1 door.

The properties of Chimera are defined in

/usr/share/dcache/defaults/chimera.properties. For

customisation the files

/etc/dcache/layouts/mylayout.conf or

/etc/dcache/dcache.conf should be

modified (see the section called “Defining domains and services”).

Example:

This example shows an extract of the

/etc/dcache/layouts/mylayout.conf

file in order to run dCache with NFSv3.

[namespaceDomain] [namespaceDomain/pnfsmanager] [namespaceDomain/nfsv3]

Example:

If you want to run the NFSv4.1 server you need to add the

corresponding nfsv41 service to a domain in the

/etc/dcache/layouts/mylayout.conf

file and start this domain.

[namespaceDomain] [namespaceDomain/pnfsmanager] [namespaceDomain/nfsv41]

If you wish dCache to access your Chimera with a PostgreSQL user

other than chimera then you must specify the username and

password in /etc/dcache/dcache.conf.

chimera.db.user=myuser chimera.db.password=secret

Important

Do not update configuration values in

/usr/share/dcache/defaults/chimera.properties,

since changes to this file will be overwritten by updates.

dCache does not need the Chimera filesystem to be mounted but

a mounted file system is convenient for administrative access.

This offers the opportunity to use OS-level tools like

ls and mkdir for

Chimera. However, direct I/O-operations like

cp are not possible, since the NFSv3 interface

provides the namespace part only. This section describes how to

start the Chimera NFSv3 server and mount the name space.

If you want to mount Chimera for easier administrative access,

you need to edit the /etc/exports file as

the Chimera NFS server uses it to manage exports. If this

file doesn’t exist it must be created. The typical

exports file looks like this:

/ localhost(rw) /data # or # /data *.my.domain(rw)

As any RPC service Chimera NFS requires the rpcbind service to run on the

host. Nevertheless rpcbind has to be configured to

accept requests from Chimera NFS.

On RHEL6 based systems you need to add

RPCBIND_ARGS="-i"

into /etc/sysconfig/rpcbind and restart

rpcbind. Check your OS

manual for details.

[root] #service rpcbind restartStopping rpcbind: [ OK ] Starting rpcbind: [ OK ]

If your OS does not provide rpcbind

Chimera NFS can use an embedded rpcbind.

This requires to disable the portmap

service if it exists.

[root] #/etc/init.d/portmap stopStopping portmap: portmap

and restart the domain in which the NFS server is running.

Example:

[root] #dcache restart namespaceDomain

Now you can mount Chimera by

[root] #mount localhost:/ /mnt

and create the root of the Chimera namespace which you can call

data:

[root] #mkdir -p /mnt/data

If you don’t want to mount chimera you can create the root of the Chimera namespace by

[root] #/usr/bin/chimera-cli Mkdir /data

You can now add directory tags. For more information on tags see the section called “Directory Tags”.

[root] #echo "chimera" | /usr/bin/chimera-cli Writetag /data sGroup[root] #echo "StoreName sql" | /usr/bin/chimera-cli Writetag /data OSMTemplate

If you plan to use dCap with a mounted file system instead of

the URL-syntax (e.g. dccp

/data/file1

/tmp/file1), you need to mount the root of

Chimera locally (remote mounts are not allowed yet). This will

allow us to establish wormhole files so dCap clients can

discover the dCap doors.

[root] #mount localhost:/ /mnt[root] #mkdir /mnt/admin/etc/config/dCache[root] #touch /mnt/admin/etc/config/dCache/dcache.conf[root] #touch /mnt/admin/etc/config/dCache/'.(fset)(dcache.conf)(io)(on)'[root] #echo "<door host>:<port>" > /mnt/admin/etc/config/dCache/dcache.conf

The default values for ports can be found in Chapter 29, dCache Default Port Values (for dCap the default port is

22125) and in the file

/usr/share/dcache/defaults/dcache.properties. They

can be altered in

/etc/dcache/dcache.conf

When the configuration is complete you can unmount Chimera:

[root] #umount /mnt

Note

Please note that whenever you need to change the configuration,

you have to remount the root localhost:/ to a

temporary location like /mnt.

Many configuration parameters of Chimera and the

application specific meta data is accessed by reading, writing, or

creating files of the form

.(<command>)(<para>).

For example, the following prints the ChimeraID of the file

/data/some/dir/file.dat:

[user] $cat /data/any/sub/directory/'.(id)(file.dat)'0004000000000000002320B8[user] $

From the point of view of the NFS protocol, the file

.(id)(file.dat) in the directory /data/some/dir/ is read. However,

Chimera interprets it as the command id with

the parameter file.dat executed in the

directory /data/some/dir/. The quotes are

important, because the shell would otherwise try to interpret the

parentheses.

Some of these command files have a second parameter in a third

pair of parentheses. Note, that files of the form

.(<command>)(<para>)

are not really files. They are not shown when listing directories

with ls. However, the command files are listed

when they appear in the argument list of ls as

in

[user] $ls -l '-rw-r--r-- 11 root root 7 Aug 6 2010 .(tag)(sGroup).(tag)(sGroup)'

Only a subset of file operations are allowed on these special command files. Any other operation will result in an appropriate error. Beware, that files with names of this form might accidentally be created by typos. They will then be shown when listing the directory.

Each file in Chimera has a unique 18 byte long ID. It is referred to as ChimeraID or as pnfsID. This is comparable to the inode number in other filesystems. The ID used for a file will never be reused, even if the file is deleted. dCache uses the ID for all internal references to a file.

Example:

The ID of the file

example.org/data/examplefile can be

obtained by reading the command-file

.(id)(examplefile) in the directory of

the file.

[user] $cat /example.org/data/'.(id)(examplefile)'0000917F4A82369F4BA98E38DBC5687A031D

A file in Chimera can be referred to by the ID for most operations.

Example:

The name of a file can be obtained from the ID

with the command nameof as follows:

[user] $cd /example.org/data/[user] $cat '.(nameof)(0000917F4A82369F4BA98E38DBC5687A031D)'examplefile

And the ID of the directory it resides in is obtained by:

[user] $cat '.(parent)(0000917F4A82369F4BA98E38DBC5687A031D)'0000595ABA40B31A469C87754CD79E0C08F2

This way, the complete path of a file may be obtained starting from the ID.

In the Chimera namespace, each directory can have a number of tags. These directory tags may be used within dCache to control the file placement policy in the pools (see the section called “The Pool Selection Mechanism”). They might also be used by a tertiary storage system for similar purposes (e.g. controlling the set of tapes used for the files in the directory).

Note

Directory tags are not needed to control the behaviour of dCache. dCache works well without directory tags.

You can create tags with

[user] $echo "<content>" | /usr/bin/chimera-cli writetag <directory> <tagName>

list tags with

[user] $/usr/bin/chimera-cli lstag <directory>

and read tags with

[user] $/usr/bin/chimera-cli readtag <directory> <tagName>

Example:

Create tags for the directory data with

[user] $echo "myGroup" | /usr/bin/chimera-cli writetag /data sGroup[user] $echo "StoreName myStore" | /usr/bin/chimera-cli writetag /data OSMTemplate

list the existing tags with

[user] $/usr/bin/chimera-cli lstag /dataTotal: 2 OSMTemplate sGroup

and their content with

[user] $/usr/bin/chimera-cli readtag /data OSMTemplateStoreName myStore[user] $/usr/bin/chimera-cli readtag /data sGroupmyGroup

If the namespace is mounted, change to the directory for which the tag should be set and create a tag with

[user] $cd <directory>[user] $echo '<content1>' > '.(tag)(<tagName1>)'[user] $echo '<content2>' > '.(tag)(<tagName2>)'

Then the existing tags may be listed with

[user] $cat '.(tag)(<tagname1>) .(tag)(<tagname2>).(tags)()'

and the content of a tag can be read with

[user] $cat '<content1>.(tag)(<tagname1>)'[user] $cat '<content2>.(tag)(<tagName2>)'

Example:

Create tags for the directory data with

[user] $cd data[user] $echo 'StoreName myStore' > '.(tag)(OSMTemplate)'[user] $echo 'myGroup' > '.(tag)(sGroup)'

list the existing tags with

[user] $cat '.(tag)(OSMTemplate) .(tag)(sGroup).(tags)()'

and their content with

[user] $cat 'StoreName myStore.(tag)(OSMTemplate)'[user] $cat 'myGroup.(tag)(sGroup)'

A nice trick to list all tags with their contents is

[user] $grep "" $(cat ".(tags)()").(tag)(OSMTemplate):StoreName myStore .(tag)(sGroup):myGroup

When creating or changing directory tags by writing to the command file as in

[user] $echo '<content>' > '.(tag)(<tagName>)'

one has to take care not to treat the command files in the same way as regular files, because tags are different from files in the following aspects:

The <tagName> is limited to 62 characters and the <content> to 512 bytes. Writing more to the command file, will be silently ignored.

If a tag which does not exist in a directory is created by writing to it, it is called a primary tag.

Tags are inherited from the parent directory by a newly created directory. Changing a primary tag in one directory will change the tags inherited from it in the same way. Creating a new primary tag in a directory will not create an inherited tag in its subdirectories.

Moving a directory within the Chimera namespace will not change the inheritance. Therefore, a directory does not necessarily inherit tags from its parent directory. Removing an inherited tag does not have any effect.

Empty tags are ignored.

The following directory tags appear in the dCache context:

- OSMTemplate

Must contain a line of the form “

StoreName<storeName>” and specifies the name of the store that is used by dCache to construct the storage class if the HSM Type isosm.- HSMType

The

HSMTypetag is normally determined from the other existing tags. E.g., if the tagOSMTemplateexists,HSMType=osmis assumed. With this tag it can be set explicitly. A class implementing that HSM type has to exist. Currently the only implementations areosmandenstore.- sGroup

The storage group is also used to construct the storage class if the

HSMTypeisosm.- cacheClass

The cache class is only used to control on which pools the files in a directory may be stored, while the storage class (constructed from the two above tags) might also be used by the HSM. The cache class is only needed if the above two tags are already fixed by HSM usage and more flexibility is needed.

- hsmInstance

If not set, the

hsmInstancetag will be the same as theHSMTypetag. Setting this tag will only change the name as used in the storage class and in the pool commands.

The storage class is a

string of the form

<StoreName>:<StorageGroup>@<hsm-type>,

where <StoreName> is given by the

OSMTemplate tag,

<StorageGroup> by the

sGroup tag and

<hsm-type> by the

HSMType tag. As mentioned above the

HSMType tag is assumed to be

osm if the tag OSMTemplate

exists.

In the examples above two tags have been created.

Example:

[user] $/usr/bin/chimera-cli lstag /dataTotal: 2 OSMTemplate sGroup

As the tag OSMTemplate was created the tag

HSMType is assumed to be osm.

The storage class of the files which are copied into the

directory /data after

the tags have been set will be

myStore:myGroup@osm.

If directory tags are used to control the behaviour of dCache and/or a tertiary storage system, it is a good idea to plan the directory structure in advance, thereby considering the necessary tags and how they should be set up. Moving directories should be done with great care or even not at all. Inherited tags can only be created by creating a new directory.

Example:

Assume that data of two experiments,

experiment-a and

experiment-b is written into a namespace

tree with subdirectories /data/experiment-a and /data/experiment-b. As some pools

of the dCache are financed by

experiment-a and others by